So I did a little upgrade project this weekend: I went from a workstation-class dual-core (Core2Duo) VMware ESXi system running a pfSense VM with 512MB of RAM, a SATA HDD and a 10/100Mb LAN card to a workstation-class Core i5 Proxmox hypervisor running the same version of pfSense with 2GB of RAM, an SSD and gigabit NICs. The Core2Duo system’s 10/100Mb LAN card limited the download speed to 100Mb, so that cap came from the hardware, not the software, but I do believe the better ping times can also be attributed to the new hardware. Setting up the NICs in Proxmox can be tricky, so I’ve left notes on what I experienced below.
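To put the 100Mb cap in perspective, here is a quick back-of-the-envelope conversion from link speed to a theoretical download ceiling. The function name `mbps_to_mbytes` is just something I made up for illustration, and it ignores protocol overhead, so real-world numbers will come in lower.

```shell
#!/bin/sh
# Convert a link speed in megabits per second to megabytes per second.
# Uses 8 bits per byte and ignores protocol overhead (an assumption),
# so actual throughput will be somewhat lower than this ceiling.
mbps_to_mbytes() {
    awk -v mbps="$1" 'BEGIN { printf "%.1f\n", mbps / 8 }'
}

mbps_to_mbytes 100     # Fast Ethernet ceiling: prints 12.5
mbps_to_mbytes 1000    # gigabit ceiling: prints 125.0
```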

Proxmox Install notes:

3 NICs (one on-board and two Intel NICs)

Initially I got Proxmox installed and running on my current network on a new workstation-class PC with just the on-board NIC connected. It picked up 10.0.10.175 from my DHCP server.

On Proxmox I went to set up pfSense, but before doing so I needed to bridge my NICs.

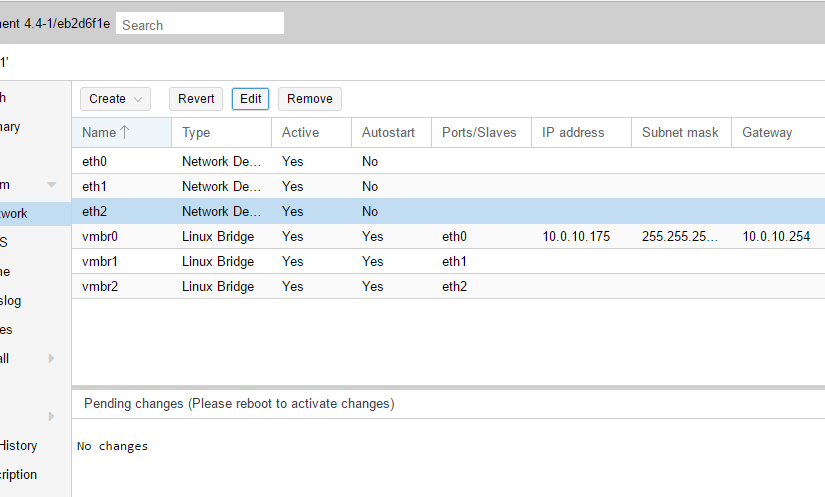

Here is my NIC setup after setting up the Linux bridge NICs:

When I initially set up the VM, I created the pfSense VM with pretty standard settings. Then, before starting it, I went to System > Network > Create > Linux Bridge and chose one of the two other Intel NICs (I did this twice, once for each NIC).

When I started the pfSense VM I got this error:

Task viewer: VM 101 - Start

    bridge 'vmbr1' does not exist
    kvm: -netdev type=tap,id=net1,ifname=tap101i1,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown: network script /var/lib/qemu-server/pve-bridge failed with status 512
    TASK ERROR: start failed: command '/usr/bin/kvm -id 101 -chardev 'socket,id=qmp,path=/var/run/qemu-server/101.qmp,server,nowait' -mon 'chardev=qmp,mode=control' -pidfile /var/run/qemu-server/101.pid -daemonize -smbios 'type=1,uuid=75940385-d64a-4fc8-b286-ade75fc08d52' -name pfsense2.x -smp '4,sockets=1,cores=4,maxcpus=4' -nodefaults -boot 'menu=on,strict=on,reboot-timeout=1000,splash=/usr/share/qemu-server/bootsplash.jpg' -vga cirrus -vnc unix:/var/run/qemu-server/101.vnc,x509,password -cpu kvm64,+lahf_lm,+sep,+kvm_pv_unhalt,+kvm_pv_eoi,enforce -m 2048 -k en-us -device 'pci-bridge,id=pci.1,chassis_nr=1,bus=pci.0,addr=0x1e' -device 'pci-bridge,id=pci.2,chassis_nr=2,bus=pci.0,addr=0x1f' -device 'piix3-usb-uhci,id=uhci,bus=pci.0,addr=0x1.0x2' -device 'usb-tablet,id=tablet,bus=uhci.0,port=1' -device 'virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x3' -iscsi 'initiator-name=iqn.1993-08.org.debian:01:6148cfb1fd55' -drive 'file=/dev/pve/vm-101-disk-1,if=none,id=drive-ide0,format=raw,cache=none,aio=native,detect-zeroes=on' -device 'ide-hd,bus=ide.0,unit=0,drive=drive-ide0,id=ide0,bootindex=100' -drive 'file=/var/lib/vz/template/iso/pfSense-CE-2.3.3-RELEASE-amd64.iso,if=none,id=drive-ide2,media=cdrom,aio=threads' -device 'ide-cd,bus=ide.1,unit=0,drive=drive-ide2,id=ide2,bootindex=200' -netdev 'type=tap,id=net0,ifname=tap101i0,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown' -device 'e1000,mac=C2:8E:F1:2E:83:E5,netdev=net0,bus=pci.0,addr=0x12,id=net0,bootindex=300' -netdev 'type=tap,id=net1,ifname=tap101i1,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown' -device 'e1000,mac=CE:AE:FA:44:EF:13,netdev=net1,bus=pci.0,addr=0x13,id=net1,bootindex=301' -netdev 'type=tap,id=net2,ifname=tap101i2,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown' -device 'e1000,mac=D2:09:7A:FC:6D:95,netdev=net2,bus=pci.0,addr=0x14,id=net2,bootindex=302'' failed: exit code 1
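The key part of that error is `bridge 'vmbr1' does not exist`: the VM config points at a bridge the host doesn’t actually have. Here is a rough sketch of how you could check that up front. The function names `vm_bridges` and `missing_bridges` are my own invention, the second argument exists only so the check can be pointed at a fake directory for testing, and I’m assuming the VM config has `netN:` lines of the form `net1: e1000=<MAC>,bridge=vmbr1`.

```shell
#!/bin/sh
# Print each bridge name referenced by a Proxmox VM config file, one per line.
# Assumes netN lines of the form "net1: e1000=<MAC>,bridge=vmbr1".
vm_bridges() {
    grep -E '^net[0-9]+:' "$1" | grep -oE 'bridge=[A-Za-z0-9_.-]+' | cut -d= -f2 | sort -u
}

# Print the bridges the VM references that are missing on the host.
# A Linux bridge device has a /sys/class/net/<name>/bridge directory.
# The optional second argument overrides that root (hypothetical, for testing).
missing_bridges() {
    conf=$1
    sysroot=${2:-/sys/class/net}
    for br in $(vm_bridges "$conf"); do
        [ -d "$sysroot/$br/bridge" ] || echo "$br"
    done
}

# Example (path assumes the VM ID 101 from the error above):
# missing_bridges /etc/pve/qemu-server/101.conf
```

If this prints nothing, every bridge the VM references exists on the host; any name it does print is one the start task would trip over.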

To fix this, I first destroyed my initial VM 100 from the Proxmox console with:

qm destroy 100

Next, I used the info I found here: https://forum.proxmox.com/threads/cant-start-vms.13824/

It seems Proxmox’s underlying Debian OS didn’t know about my other NICs.

I SSH’d into the new server with PuTTY and edited the interfaces file:

nano /etc/network/interfaces
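Since a bad edit to this file can take the host off the network, it is probably worth keeping a copy before changing anything. This is only a sketch, not something from my original notes: `backup_file` is a made-up helper name, and the `.bak.<timestamp>` suffix is just one convention.

```shell
#!/bin/sh
# Copy a file to <file>.bak.<timestamp>, preserving mode and timestamps,
# and print the path of the backup on success.
backup_file() {
    src=$1
    dest="$src.bak.$(date +%Y%m%d-%H%M%S)"
    cp -p "$src" "$dest" && echo "$dest"
}

# Example: backup_file /etc/network/interfaces
```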

and changed this config:

    auto vmbr0
    iface vmbr0 inet static
        address 10.0.10.175
        netmask 255.255.255.0
        gateway 10.0.10.254
        bridge_ports eth0
        bridge_stp off
        bridge_fd 0

To this:

    auto vmbr0
    iface vmbr0 inet static
        address 10.0.10.175
        netmask 255.255.255.0
        gateway 10.0.10.254
        bridge_ports eth0
        bridge_stp off
        bridge_fd 0

    auto vmbr1
    iface vmbr1 inet dhcp

    auto vmbr2
    iface vmbr2 inet dhcp

Then I rebooted Proxmox by issuing the command:

reboot

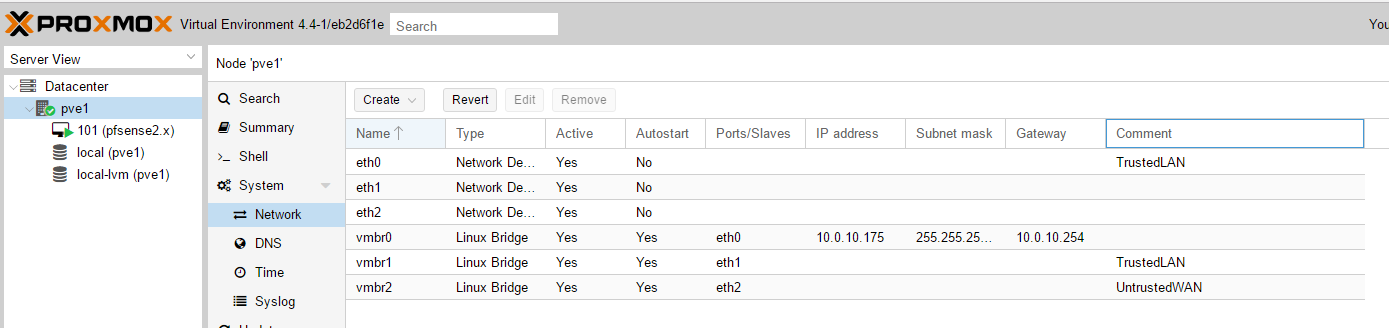

And my interfaces file ended up looking like this:

    auto lo
    iface lo inet loopback

    iface eth0 inet manual
    #TrustedLAN

    iface eth1 inet manual

    iface eth2 inet manual

    auto vmbr0
    iface vmbr0 inet static
        address 10.0.10.175
        netmask 255.255.255.0
        gateway 10.0.10.254
        bridge_ports eth0
        bridge_stp off
        bridge_fd 0

    auto vmbr1
    iface vmbr1 inet manual
        bridge_ports eth1
        bridge_stp off
        bridge_fd 0
    #TrustedLAN

    auto vmbr2
    iface vmbr2 inet manual
        bridge_ports eth2
        bridge_stp off
        bridge_fd 0
    #UntrustedWAN

I could now start the pfSense VM, and the pfSense installer recognized my network cards. :)
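One thing worth checking in a file like this is that every bridge stanza actually names a physical port. Here is a small sketch that lists any `vmbrN` stanza with no `bridge_ports` line. The function name `bridges_without_ports` is hypothetical, and I’ve also allowed the hyphenated `bridge-ports` spelling on the assumption that some files use it.

```shell
#!/bin/sh
# List bridges (vmbrN) in an interfaces file whose stanza has no bridge_ports line.
# Defaults to /etc/network/interfaces; pass another path as the first argument.
bridges_without_ports() {
    awk '
        # A new "iface" stanza starts: report the previous bridge if it had no ports.
        $1 == "iface" {
            if (name != "" && !ports) print name
            name = ($2 ~ /^vmbr/) ? $2 : ""
            ports = 0
        }
        # Accept both spellings of the option (the hyphenated one is an assumption).
        $1 == "bridge_ports" || $1 == "bridge-ports" { ports = 1 }
        # Report the last stanza in the file as well.
        END { if (name != "" && !ports) print name }
    ' "${1:-/etc/network/interfaces}"
}

# Example: bridges_without_ports /etc/network/interfaces
```

No output means every bridge in the file has a port assigned. A file where `vmbr1` and `vmbr2` are declared with only an `inet dhcp` line would get both names printed.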

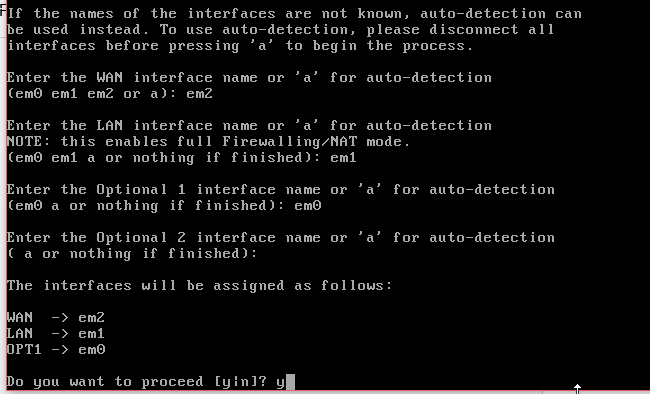

In the pfSense setup I chose 1) and was offered the following options:

With a little bit of guessing and using my laptop to find the LAN, I was able to get connected to my pfSense web console. From there, I power-cycled my cable modem and got a new Cox IP address.

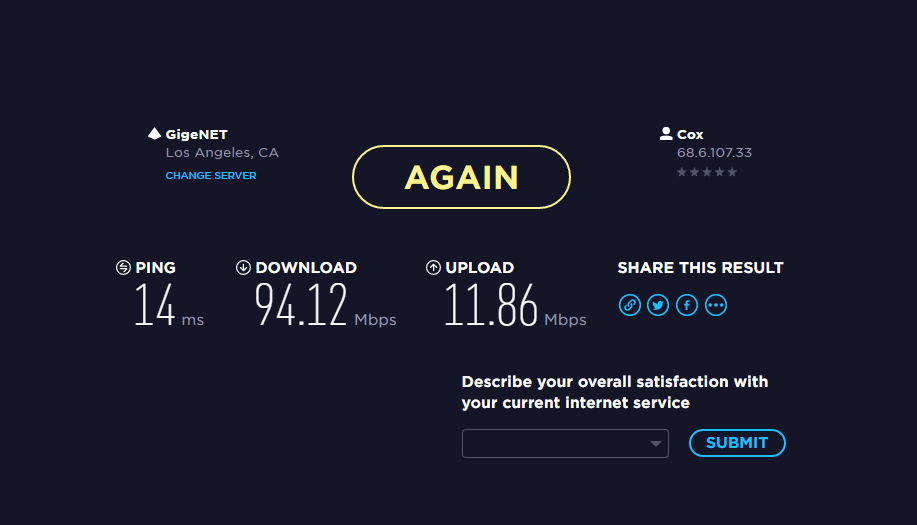

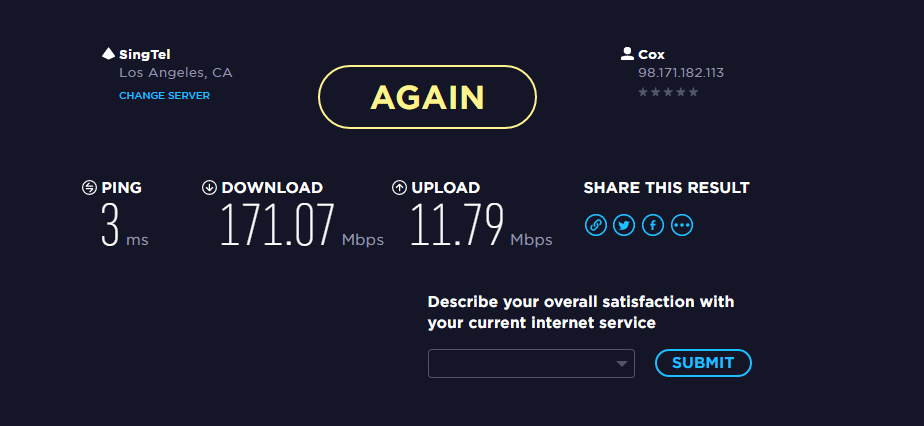

The change in speeds was actually pretty remarkable.

Here are the speedtest.net results with the old dual-core (Core2Duo) system running the ESXi VM on a SATA HDD, with 512MB of RAM and a 10/100 LAN card:

And here are my speedtest.net results with the 4-core Core i5 system running the Proxmox VM on an SSD, with 2GB of RAM and gigabit NICs:

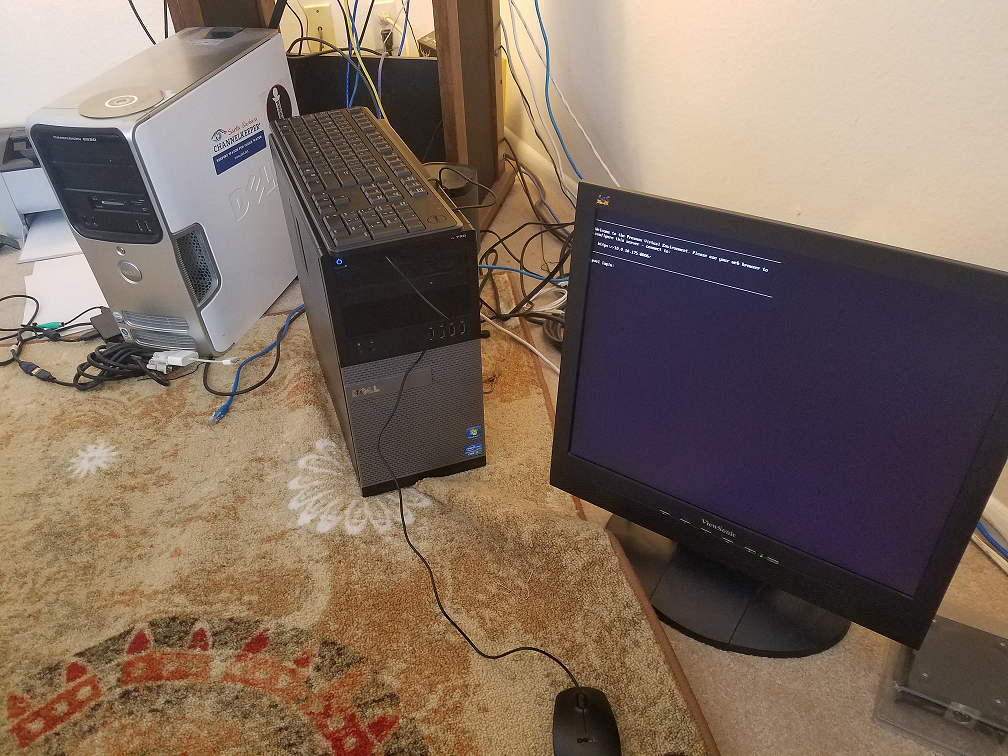

Below is an image of the old server on the left and the new server on the right.

VMware is still running on the old server and I may keep it around, but I’m also considering moving my domain controller and ISC DHCP server off of it and rebuilding it as another Proxmox VE node in a cluster. That said, I’ve read that it’s best to have 3 servers for a Proxmox cluster.

All in all I’m pretty happy with the results of upgrading my home pfSense firewall from ESXi to Proxmox, and I hope this post helps someone with their Proxmox setup.