How to Create and Build a Security Profile for Your Network on a Budget – Part 1

Start with Building a Foundation (or use an existing good one).

Credit to Kyle Bubp & irongeek.com: http://www.irongeek.com/i.php?page=videos/bsidescleveland2017/bsides-cleveland-102-blue-teamin-on-a-budget-of-zero-kyle-bubp

Use a base framework for your security project. Many standards are available, and the NIST government standards are a good, solid foundation:

- NIST 800-53

- NIST Cybersecurity Framework

- NIST CSF Tool – a nice visual tool with a graphical interface for the stages of building a security program

- CIS Critical Security Controls

Document everything

A core documentation repository is critical when setting up a security project – others will follow you and will need to look up the information you have recorded. It’s best to have a security incident response ticketing system and documentation before you need it. Have these tools up and ready.

For policy, procedure, how-tos, etc:

- MediaWiki (free)

- Atlassian Confluence ($10 for 10 users) – Gliffy plugin for Confluence

- OneNote/SharePoint – not every company is entirely open source

Incident Response Ticketing/Documentation systems:

- RTIR (https://bestpractical.com/download-page)

- TheHive (https://thehive-project.org) – easier to install

Map out your entire network

- NetDB – uses the ARP tables and MAC databases on your network gear. Give it a service account and NetDB will use ssh/telnet to find every connected device and present the results in a nice HTTP interface. You can set up a cron job that scans the NetDB database every hour and pipes new device connections to an email address. Knowing when something comes onto your network is critical.

.ova is available at https://www.kylebubp.com/files/netdb.ova

Supports the following: Cisco, Palo Alto, Junos OS, Aruba, Dell PowerConnect

- nmap scans + ndiff/yandiff – not just for red teams. Export the results, diff them against the previous scan, and alert if something changed.

- NetDisco

https://sourceforge.net/projects/netdisco – uses SNMP to inventory your network devices.

- Map your network – create a Visio document and have a good network map.
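The scan-and-diff idea above can be sketched in a few lines of Python. This is only a minimal illustration, not a replacement for ndiff: it assumes the scans were saved with nmap's XML output (`-oX`), it only looks at the first address listed for each host, and the sample reports and addresses are made up.

```python
import xml.etree.ElementTree as ET


def open_ports(nmap_xml: str) -> set:
    """Return {(address, protocol, port)} for every open port in an nmap XML report."""
    found = set()
    root = ET.fromstring(nmap_xml)
    for host in root.iter("host"):
        address = host.find("address")  # first address element only (assumption)
        if address is None:
            continue
        for port in host.iter("port"):
            state = port.find("state")
            if state is not None and state.get("state") == "open":
                found.add((address.get("addr"), port.get("protocol"), int(port.get("portid"))))
    return found


def diff_scans(old_xml: str, new_xml: str) -> dict:
    """Compare two reports and list ports that opened or closed in between."""
    old, new = open_ports(old_xml), open_ports(new_xml)
    return {"opened": sorted(new - old), "closed": sorted(old - new)}


# Made-up sample data in the shape of `nmap -oX` output.
YESTERDAY = """<nmaprun>
  <host><address addr="10.0.0.5" addrtype="ipv4"/>
    <ports><port protocol="tcp" portid="22"><state state="open"/></port></ports>
  </host>
</nmaprun>"""

TODAY = """<nmaprun>
  <host><address addr="10.0.0.5" addrtype="ipv4"/>
    <ports>
      <port protocol="tcp" portid="22"><state state="open"/></port>
      <port protocol="tcp" portid="3389"><state state="open"/></port>
    </ports>
  </host>
  <host><address addr="10.0.0.9" addrtype="ipv4"/>
    <ports><port protocol="tcp" portid="80"><state state="open"/></port></ports>
  </host>
</nmaprun>"""

changes = diff_scans(YESTERDAY, TODAY)
for addr, proto, port in changes["opened"]:
    print(f"NEW: {addr} {proto}/{port}")
```

Anything in `changes["opened"]` is a candidate for an alert email; a new address appearing there also means a new device answered the scan.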

Visibility

osquery, developed by Facebook, can give you all the visibility you need.

Agents for macOS, Windows, Linux

Deploy across your enterprise with Chef, Puppet, or SCCM

Do fun things like search for IoCs (FBI file hashes, processes) – pipe the data into Elastic Stack for visibility and searchability
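As a rough sketch of the IoC search, the snippet below matches file hashes from osquery results against a list of known-bad hashes. It assumes the results were exported as JSON (for example with `osqueryi --json` querying the `hash` table for `path` and `sha256`); the rows and hashes shown are placeholders, not real indicators.

```python
import json


def match_iocs(osquery_json: str, bad_hashes: set) -> list:
    """Return the paths whose sha256 appears in the IoC hash set."""
    rows = json.loads(osquery_json)
    wanted = {h.lower() for h in bad_hashes}  # compare case-insensitively
    return sorted(row["path"] for row in rows if row.get("sha256", "").lower() in wanted)


# Placeholder rows shaped like the JSON osquery emits: a list of string-valued objects.
SAMPLE_ROWS = json.dumps([
    {"path": "/tmp/a.bin", "sha256": "aa" * 32},
    {"path": "/tmp/b.txt", "sha256": "bb" * 32},
])

# Placeholder IoC list; in practice this would come from a threat feed.
IOC_HASHES = {"AA" * 32}

hits = match_iocs(SAMPLE_ROWS, IOC_HASHES)
print(hits)
```

The same comparison can be done inside the Elastic Stack once the data is shipped there; doing it in a script is just a quick way to check a fresh indicator list against one host.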

- SpiderFoot: http://www.spiderfoot.net/info/ – automated reconnaissance that provides a rich set of data to pinpoint areas of focus.

User Data Discovery

OpenDLP – (github) or (download an .ova) – scans file shares for sensitive data. Using a normal user account, you can scan for available shares and the data they expose. Run it over the weekend and see what you can find. Then find the data owners and determine where the data should reside.
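To give a feel for what this kind of discovery scan does, here is a minimal sketch that walks a directory tree and flags files containing patterns that look like US Social Security numbers or payment card numbers. It is not how OpenDLP is implemented; the patterns, the `scan_tree` helper, and the sample files are illustrative assumptions.

```python
import re
import tempfile
from pathlib import Path

SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_PATTERN = re.compile(r"\b\d{13,16}\b")


def luhn_ok(number: str) -> bool:
    """Luhn checksum, used to cut down false positives on card-like numbers."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def scan_tree(root: Path) -> dict:
    """Map each file (relative path) to the kinds of sensitive data found in it."""
    findings = {}
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        try:
            text = path.read_text(errors="ignore")
        except OSError:
            continue  # unreadable file: skip rather than abort the scan
        kinds = []
        if SSN_PATTERN.search(text):
            kinds.append("ssn")
        if any(luhn_ok(m.group()) for m in CARD_PATTERN.finditer(text)):
            kinds.append("card")
        if kinds:
            findings[path.relative_to(root).as_posix()] = kinds
    return findings


# Demo on a throwaway directory standing in for a mounted file share.
with tempfile.TemporaryDirectory() as tmp:
    share = Path(tmp)
    (share / "hr").mkdir()
    (share / "hr" / "payroll.txt").write_text("SSN 123-45-6789\n")
    (share / "notes.txt").write_text("lunch at noon\n")
    (share / "orders.csv").write_text("card,4111111111111111\n")
    report = scan_tree(share)

print(report)
```

The output is a starting list for the conversation with data owners, not proof of a leak; pattern matches still need a human look.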

Hardening Your Network

CIS Benchmarks – Center for Internet Security Benchmarks: 100+ configuration guidelines for various technology groups to safeguard systems against today’s evolving cyber threats.

Out of the box, Windows 10 scores 22% against the CIS benchmark.

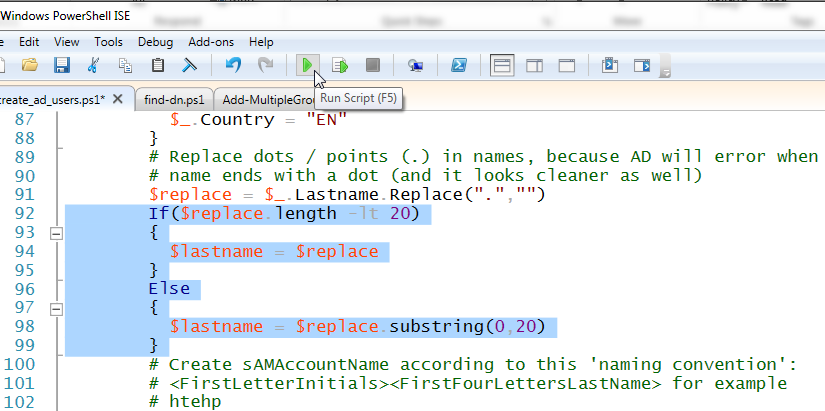

It’s difficult to secure your network if everything is a snowflake. While not exciting, configuration management is important. Deploy configs across your org using tools like GPO, Chef, or Puppet.

Change management is also important – use a git repo for tracking changes to your config scripts.
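One way to picture a benchmark score and configuration drift is to compare a host's settings against a baseline kept in version control. The sketch below does that with plain dictionaries; the setting names are hypothetical and are not actual CIS control identifiers.

```python
def compliance_report(baseline: dict, actual: dict) -> tuple:
    """Return (percent of baseline settings met, settings that drifted)."""
    drift = {
        key: {"expected": want, "actual": actual.get(key)}
        for key, want in baseline.items()
        if actual.get(key) != want
    }
    passed = len(baseline) - len(drift)
    score = 100.0 * passed / len(baseline) if baseline else 100.0
    return score, drift


# Hypothetical baseline, e.g. loaded from a file tracked in git.
BASELINE = {
    "min_password_length": 14,
    "smbv1_enabled": False,
    "firewall_enabled": True,
    "guest_account_enabled": False,
}

# Hypothetical settings collected from one host.
HOST = {
    "min_password_length": 8,
    "smbv1_enabled": False,
    "firewall_enabled": True,
    "guest_account_enabled": True,
}

score, drift = compliance_report(BASELINE, HOST)
print(f"{score:.0f}% compliant")
for name, values in drift.items():
    print(f"  {name}: expected {values['expected']}, found {values['actual']}")
```

Because the baseline lives in a repo, every change to it is reviewed and dated, which is the change-management half of the picture.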

Safety vs. Risk

Scanning for Vulnerabilities:

OpenVAS (Greenbone) is a still-maintained fork of Nessus and the default vulnerability scanner in AlienVault. It does a great job in comparison with commercial products. Be careful: run some safe scans first, and avoid scanning critical equipment such as life-support systems in a hospital.
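A simple way to keep fragile equipment out of a scan is to build the target list with an explicit exclusion list before handing it to the scanner. The sketch below uses Python's standard `ipaddress` module; the scope and excluded addresses are documentation-range placeholders, and `scan_targets` is a hypothetical helper, not part of OpenVAS.

```python
import ipaddress


def scan_targets(scope: str, excluded: list) -> list:
    """List host addresses in scope, minus any excluded address or subnet."""
    blocked = [ipaddress.ip_network(item) for item in excluded]
    targets = []
    for host in ipaddress.ip_network(scope).hosts():
        if not any(host in net for net in blocked):
            targets.append(str(host))
    return targets


# Placeholder scope and exclusions (e.g. medical devices that must never be scanned).
targets = scan_targets("192.0.2.0/29", ["192.0.2.2", "192.0.2.4/31"])
print("\n".join(targets))
```

Keeping the exclusion list in the same repo as your configs means it gets reviewed like any other change.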

Scan web apps:

Arachni Framework – for finding bugs in your developers' code

OWASP ZAP (Zed Attack Proxy)

Nikto2 (Server config scanner)

PortSwigger Burp Suite (not free – $350)

Harden your web servers:

Fail2ban – Python-based IPS that watches log files (such as Apache logs) and bans offending IPs

ModSecurity – Open source WAF for Apache & IIS
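The core idea behind a log-driven IPS like Fail2ban can be sketched as follows: count failures per source IP inside a sliding time window and flag any IP that crosses a threshold. This is a simplified illustration, not Fail2ban's actual code; it assumes Apache common log format, treats 401/403 responses as failures, and only returns the IPs instead of touching the firewall. The `max_retry` and `find_time` parameters are loosely modeled on Fail2ban's `maxretry` and `findtime` settings.

```python
import re
from collections import defaultdict, deque
from datetime import datetime, timedelta

# Apache common log format: ip ident user [timestamp] "request" status size
LOG_LINE = re.compile(r'^(?P<ip>\S+) \S+ \S+ \[(?P<ts>[^\]]+)\] "[^"]*" (?P<status>\d{3}) ')
FAILURE_STATUSES = {"401", "403"}  # assumption: these count as failed attempts


def ips_to_ban(lines, max_retry=3, find_time=timedelta(minutes=10)):
    """Return IPs with at least max_retry failures inside any find_time window."""
    recent = defaultdict(deque)  # ip -> timestamps of recent failures
    banned = []
    for line in lines:
        m = LOG_LINE.match(line)
        if not m or m.group("status") not in FAILURE_STATUSES:
            continue
        ip = m.group("ip")
        when = datetime.strptime(m.group("ts"), "%d/%b/%Y:%H:%M:%S %z")
        window = recent[ip]
        window.append(when)
        while when - window[0] > find_time:  # drop failures older than the window
            window.popleft()
        if len(window) >= max_retry and ip not in banned:
            banned.append(ip)
    return banned


# Made-up log lines using documentation-range addresses.
SAMPLE_LOG = [
    '203.0.113.7 - - [10/Oct/2017:13:55:01 +0000] "GET /admin HTTP/1.1" 401 381',
    '203.0.113.7 - - [10/Oct/2017:13:55:05 +0000] "GET /admin HTTP/1.1" 401 381',
    '198.51.100.4 - - [10/Oct/2017:13:55:06 +0000] "GET / HTTP/1.1" 200 1024',
    '203.0.113.7 - - [10/Oct/2017:13:55:09 +0000] "GET /admin HTTP/1.1" 401 381',
    '198.51.100.4 - - [10/Oct/2017:13:56:00 +0000] "GET /login HTTP/1.1" 401 381',
]

banned = ips_to_ban(SAMPLE_LOG)
print(banned)
```

In a real deployment, let Fail2ban itself do this work, since it also handles the ban action and the unban timer; the sketch is only meant to show why the tool depends on clean, consistent logs.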