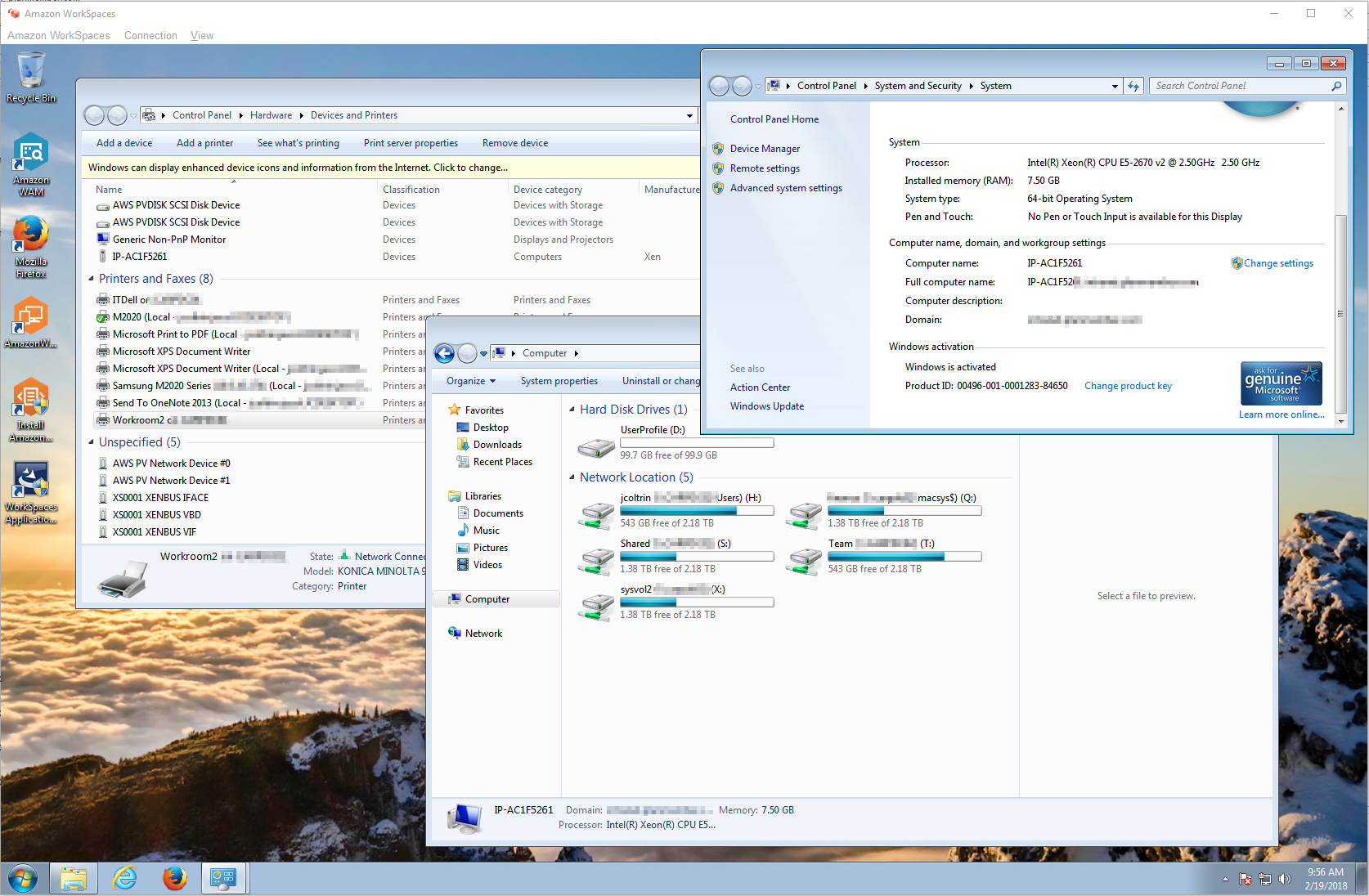

I ran into trouble when trying to clone a new Optiplex 7050. My normal Clonezilla cloning procedure needed a little tweaking to accommodate Windows 10, UEFI, NVMe M.2, Secure Boot, and RAID On. Follow the procedure below to clone systems with these newer drives and BIOS versions.

As a side note, I've enjoyed using Clonezilla for many years. I love its convenience and not having to manage Windows images with something like SCCM. SCCM has its place in some organizations, but I believe Clonezilla is perfectly fine for creating OS images for different computer models. I have approximately 15 different OS images, covering everything from Lenovo laptops to Dell Optiplex 380s and Optiplex 7050s.

Requirements:

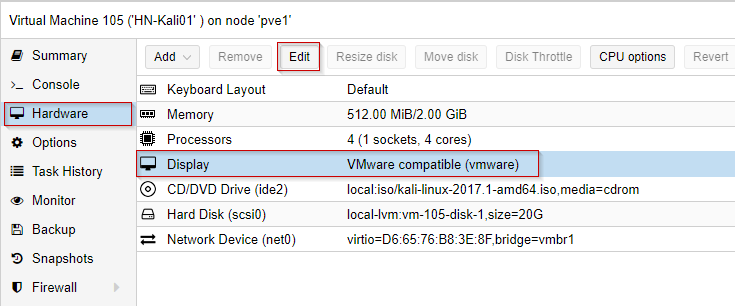

- 1 x USB 2.0 or 3.0 thumb drive (2 GB minimum) for the bootable Clonezilla drive, made bootable with the 20170905-zesty version of Clonezilla

- 1 x USB 3.0 external HDD that is larger than the TOTAL size of your M.2 NVMe drive (I use a 4 TB Western Digital My Passport). In my previous experience, Clonezilla created images containing only the used space on the source HDD; with these UEFI/NVMe drives, however, the image on disk is the total size of the NVMe drive.

- 2 x Dell Optiplex 7050 (Source and Target) computers

- 1 x separate PC or laptop you can use to create the bootable Clonezilla USB thumb drive
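Since the image can end up as large as the whole NVMe drive, it's worth checking free space on the external HDD before you start. Here's a minimal Python sketch using the standard library's shutil.disk_usage; the function name is hypothetical, and it assumes the external drive is mounted where your OS can see it and that you plan for the worst case (a full-size image).

```python
import shutil


def has_room_for_image(mount_point: str, nvme_size_bytes: int) -> bool:
    """Return True if the drive at mount_point has more free space than the
    full capacity of the source NVMe drive.

    Hypothetical helper: it plans for the worst case, where the image is as
    large as the whole NVMe drive rather than just its used space.
    """
    free_bytes = shutil.disk_usage(mount_point).free
    return free_bytes > nvme_size_bytes
```

For example, has_room_for_image("/media/external", 256 * 10**9) would check a hypothetical Linux mount point against a 256 GB NVMe drive.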

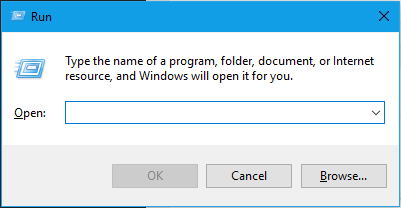

1. Configure your Source Windows 10 Dell Optiplex 7050 machine as necessary: install all applications, create user accounts, and uninstall bloatware. Make sure you create an administrator account and password. As final preparation for cloning, either run Sysprep (found in C:\Windows\System32\Sysprep), or make sure Windows 10 shuts down completely by creating a shortcut to shutdown /s /t 0 and running it. A regular shutdown with Fast Startup enabled leaves Windows partly hibernated, which can stop Clonezilla from imaging the NTFS partition cleanly.

2. On a separate PC, download Rufus, which we'll use to create a bootable USB thumb drive.

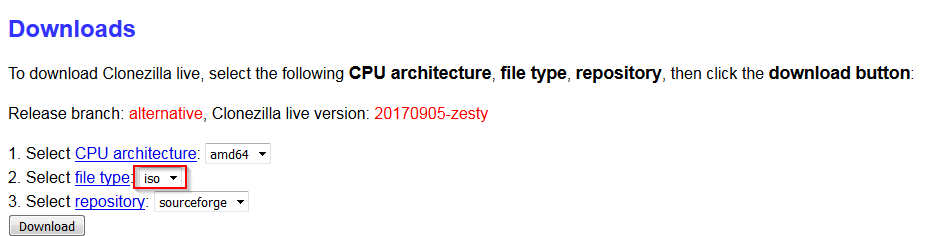

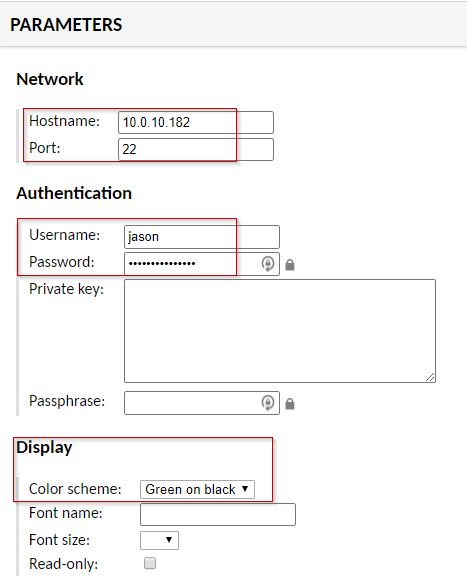

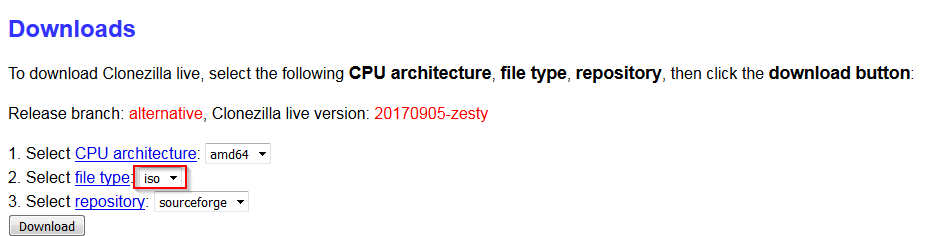

3. On the separate PC, download the AMD64 version of Clonezilla live alternative (Ubuntu-based) from the Clonezilla website (this version is required for the newer BIOSes):

4. Change the file type to ISO and hit Download.

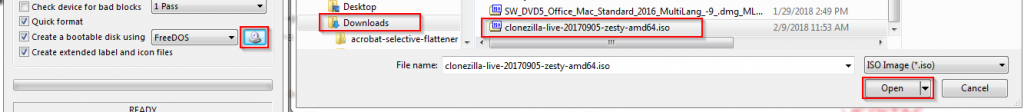

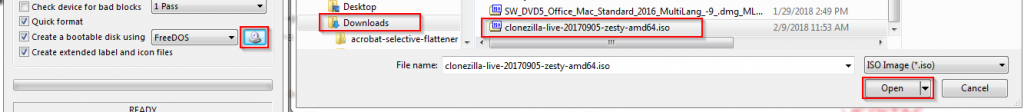
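Optionally, before writing the ISO with Rufus, you can confirm the download isn't corrupted by comparing its SHA-256 checksum with the value published for the release on the Clonezilla download site (assuming one is listed for your build). A minimal Python sketch using hashlib; the function names are hypothetical.

```python
import hashlib


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Compute the SHA-256 hex digest of a file, reading it in 1 MiB chunks
    so a large ISO doesn't have to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def iso_matches(path: str, expected_hex: str) -> bool:
    """Compare the file's digest with a published checksum, ignoring case
    and surrounding whitespace (e.g. when pasted from a web page)."""
    return sha256_of_file(path) == expected_hex.strip().lower()
```

Usage: iso_matches("clonezilla-live-20170905-zesty-amd64.iso", "<published sha256>"), where the file name is only an example of what your download might be called.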

5. Insert your USB thumb drive into the separate computer and run Rufus. Under Device, select the drive you just attached, then point Rufus to the .iso file you just downloaded.

6. Hit Start and the bootable USB thumb drive with Clonezilla will be created.

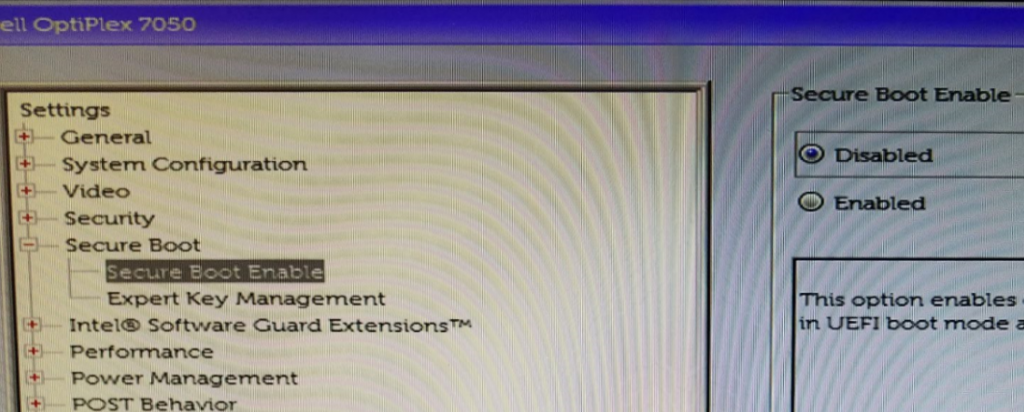

7. On the Source computer, insert the USB thumb drive into one of the top (black) USB ports on the front panel, and insert the external USB HDD into the blue USB 3.0 port. Attach the keyboard, mouse, power, and monitor.

8. Power on the Source computer and start mashing the F12 key on the keyboard to get to the one-time boot menu.

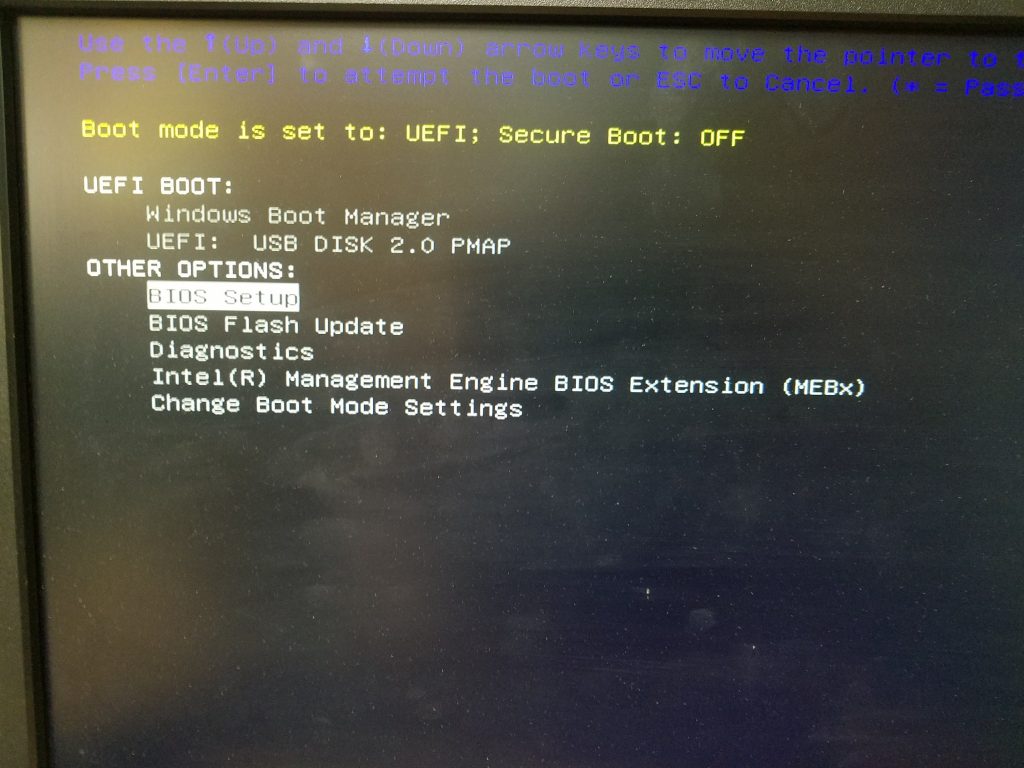

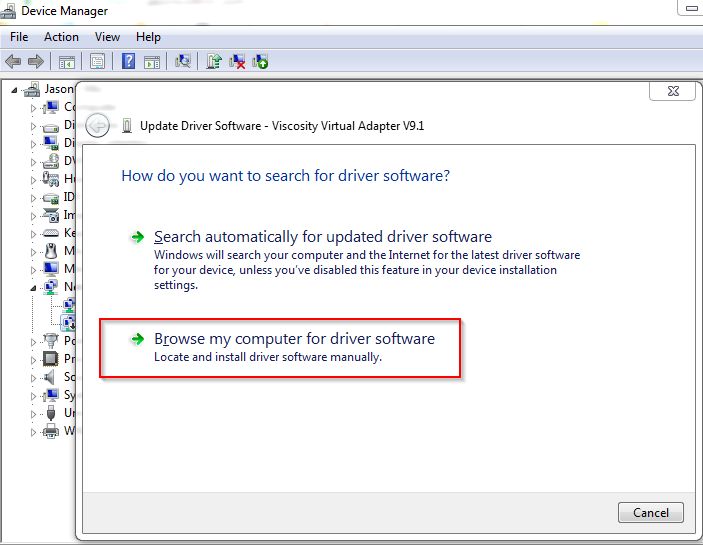

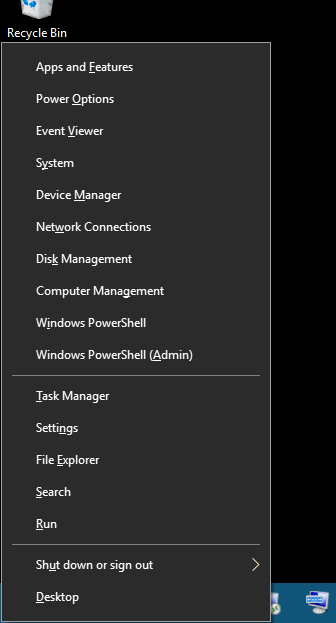

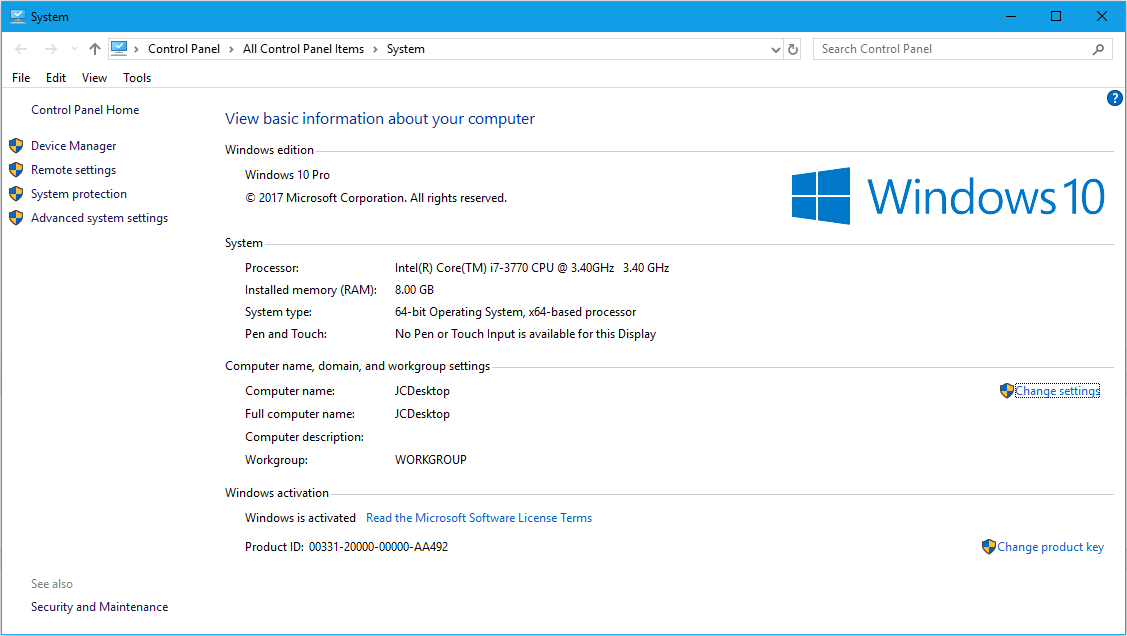

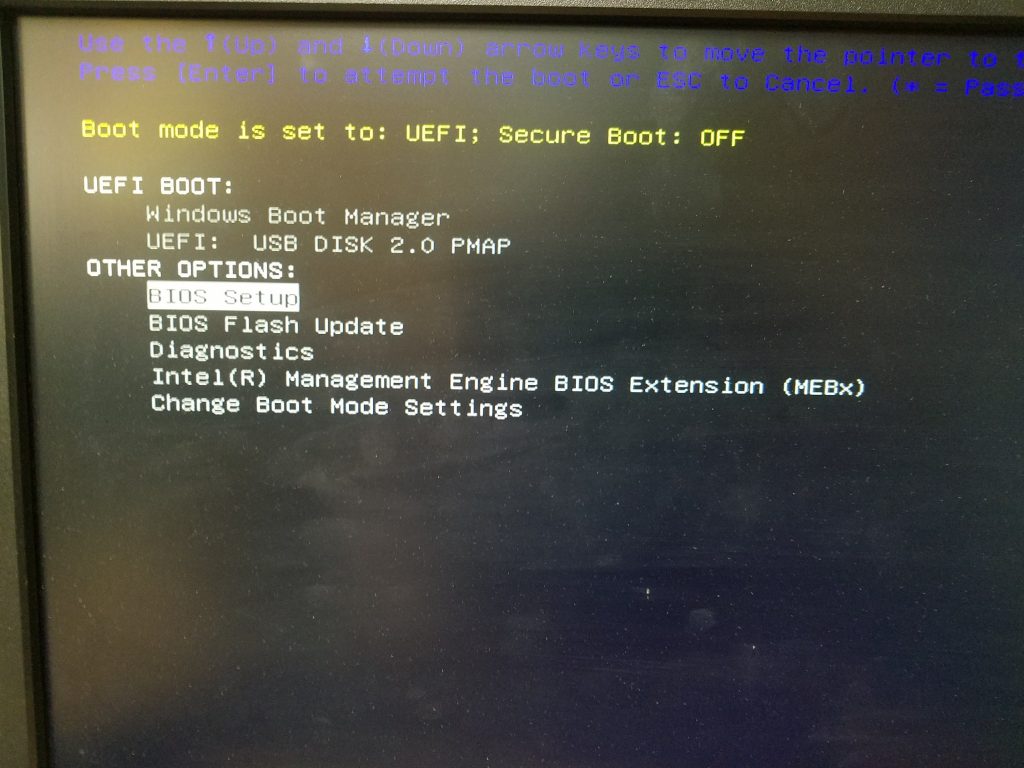

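9. Before we begin, we need to make sure Clonezilla can find our NVMe drive. By default, Secure Boot is enabled and SATA Operation is set to RAID On; in RAID On mode the NVMe drive sits behind Intel Rapid Storage Technology and typically isn't visible to Linux-based tools like Clonezilla. We need to disable Secure Boot, switch SATA Operation to AHCI, and turn off UEFI Boot Path Security before we can continue. UEFI booting itself stays enabled.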

10. Select Setup from the Boot Menu:

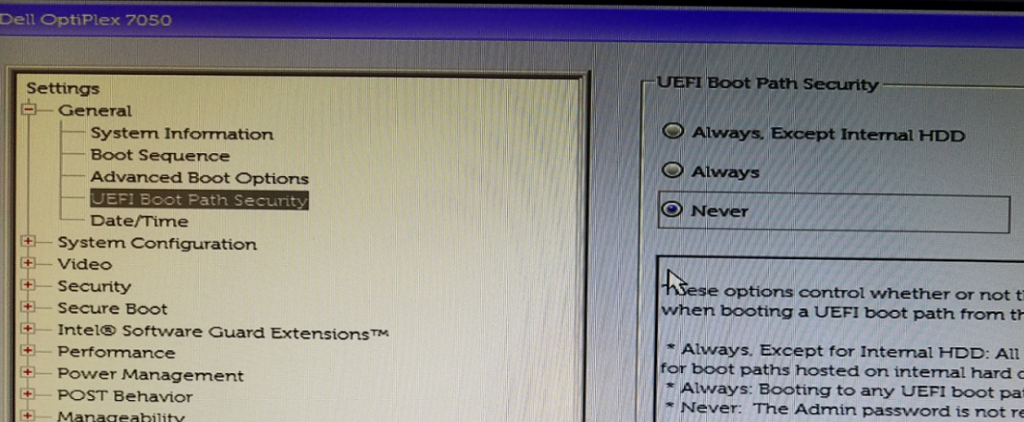

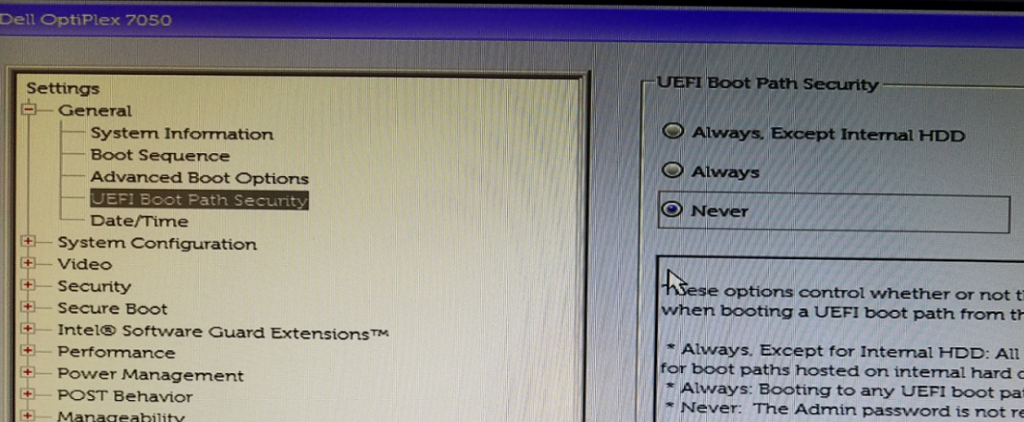

11. In the BIOS, under the General heading, select UEFI Boot Path Security and change it from Always to Never.

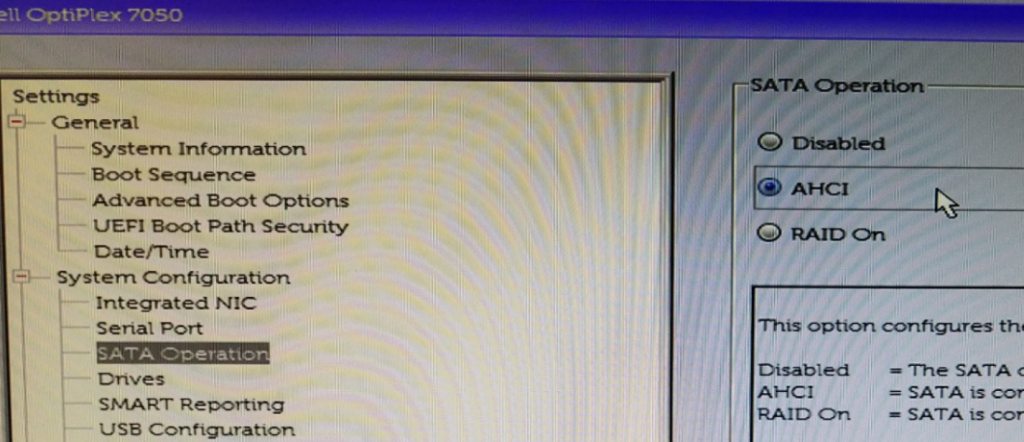

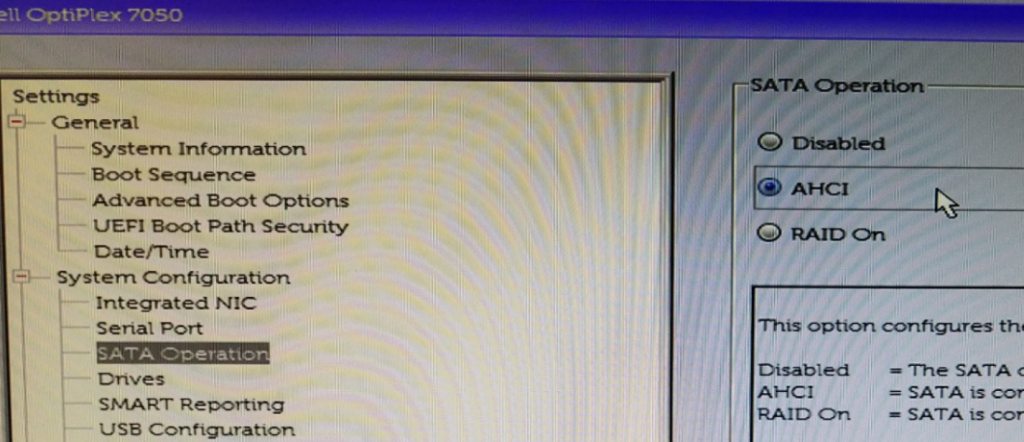

12. Next, change System Configuration > SATA Operation from RAID On to AHCI.

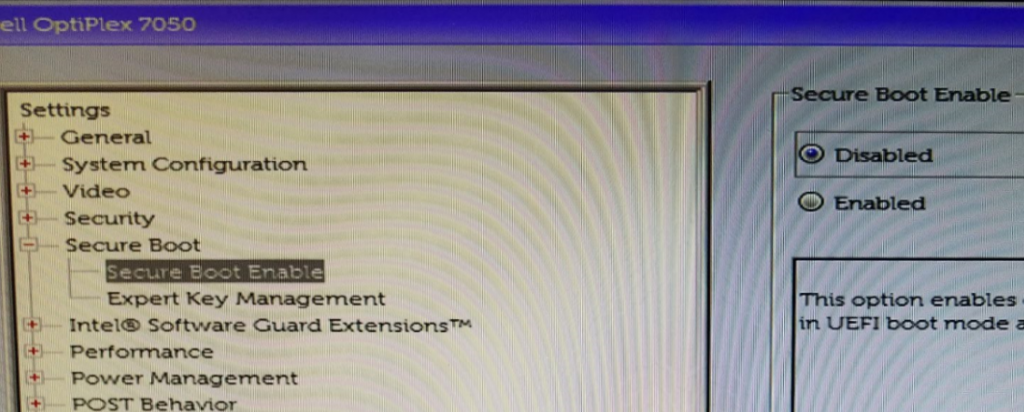

13. Lastly, change Secure Boot > Secure Boot Enable from “Enabled” to “Disabled”.

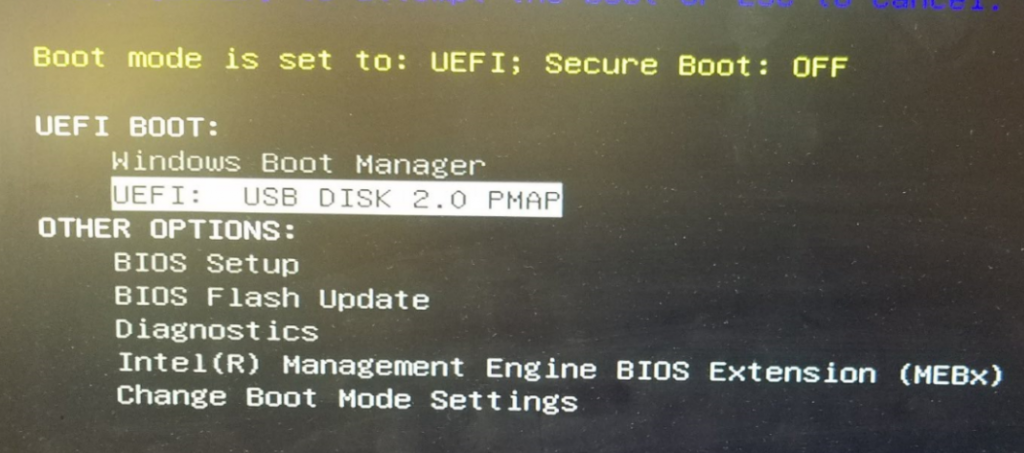

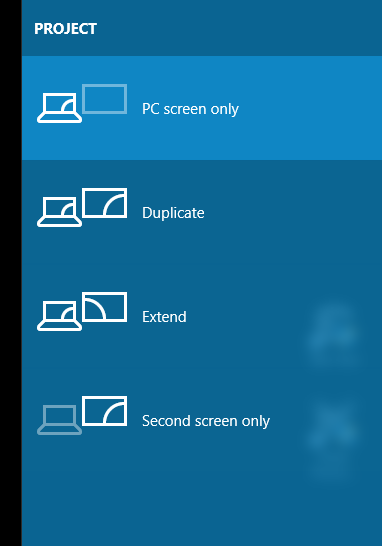

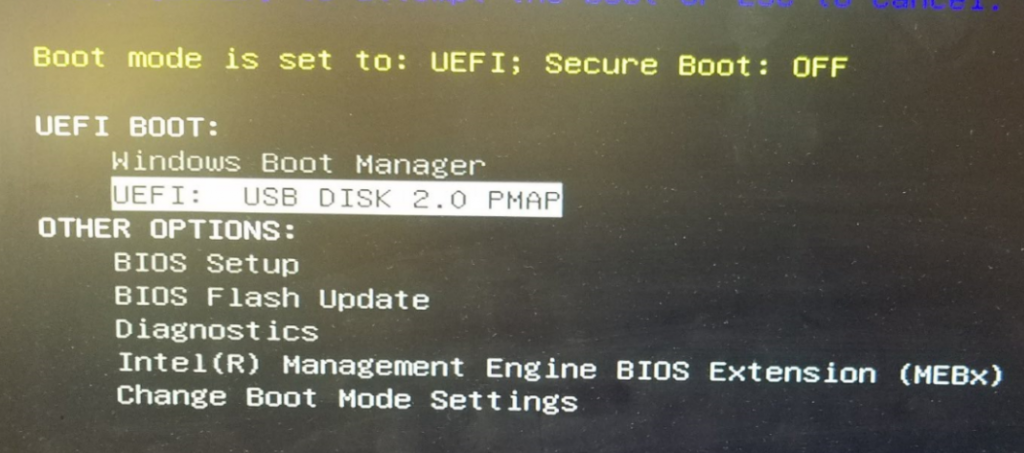

Apply the changes, then save and exit the BIOS. On the next boot, start mashing the F12 key again, and this time select UEFI: USB DISK 2.0 PMAP (the exact name depends on your thumb drive).
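These three BIOS changes have to be undone once imaging is finished, so it helps to write them down. The small Python checklist below is a hypothetical helper (not a Dell tool; it doesn't touch the BIOS) that records the settings from steps 11, 12, and 13 with the default values described in this guide and prints them in either direction.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class BiosChange:
    path: str         # menu location in the Dell BIOS setup
    original: str     # default value on the Optiplex 7050, per this guide
    for_cloning: str  # value needed while Clonezilla runs


# Hypothetical record of steps 11, 12, and 13 of this guide.
CHANGES = [
    BiosChange("General > UEFI Boot Path Security", "Always", "Never"),
    BiosChange("System Configuration > SATA Operation", "RAID On", "AHCI"),
    BiosChange("Secure Boot > Secure Boot Enable", "Enabled", "Disabled"),
]


def checklist(restore: bool = False) -> list[str]:
    """Return one line per setting: apply the cloning values, or with
    restore=True put the original values back before booting Windows."""
    lines = []
    for c in CHANGES:
        if restore:
            old, new = c.for_cloning, c.original
        else:
            old, new = c.original, c.for_cloning
        lines.append(f"{c.path}: {old} -> {new}")
    return lines


if __name__ == "__main__":
    print("Before cloning:")
    print("\n".join(checklist()))
    print("After cloning:")
    print("\n".join(checklist(restore=True)))
```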

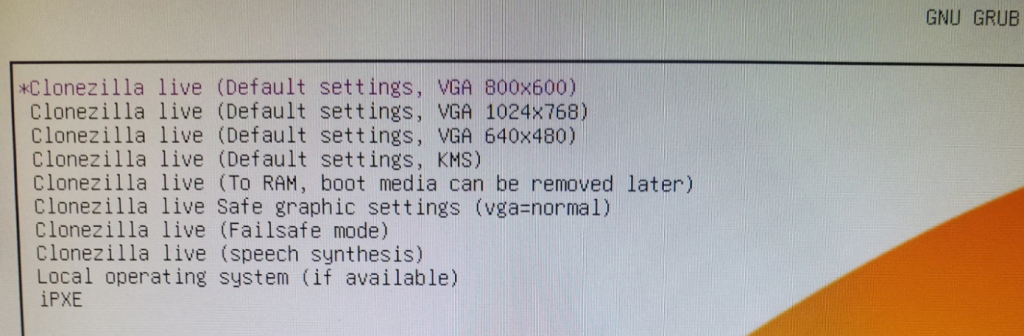

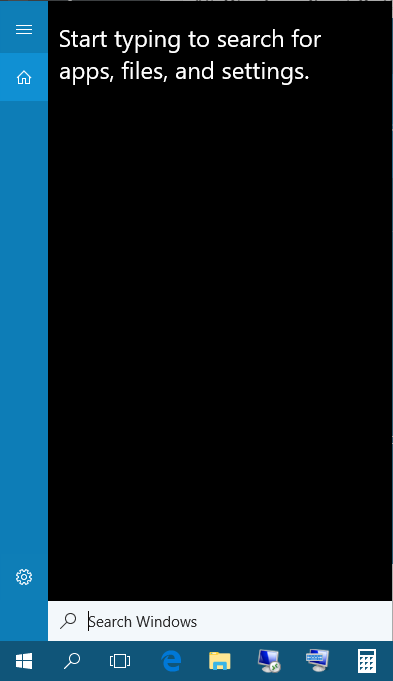

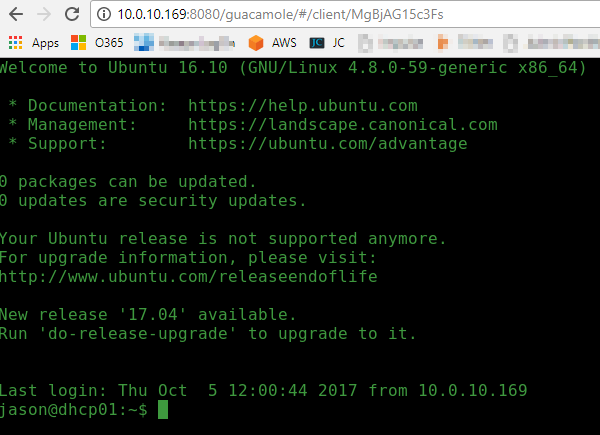

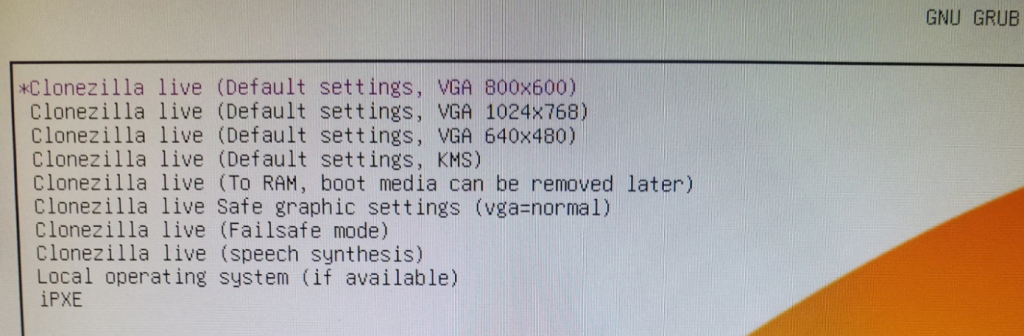

Clonezilla will boot from the USB drive; choose the default entry (hit Enter):

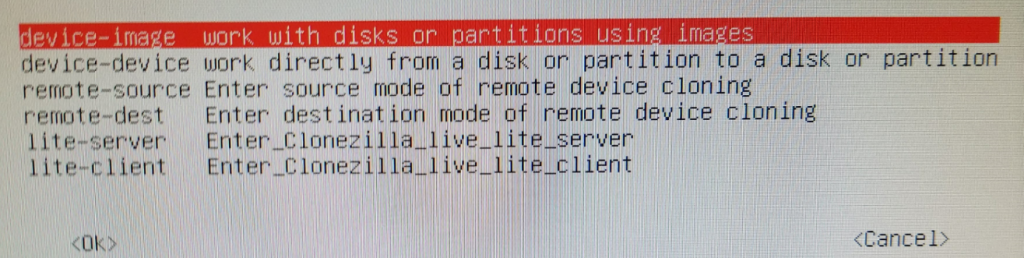

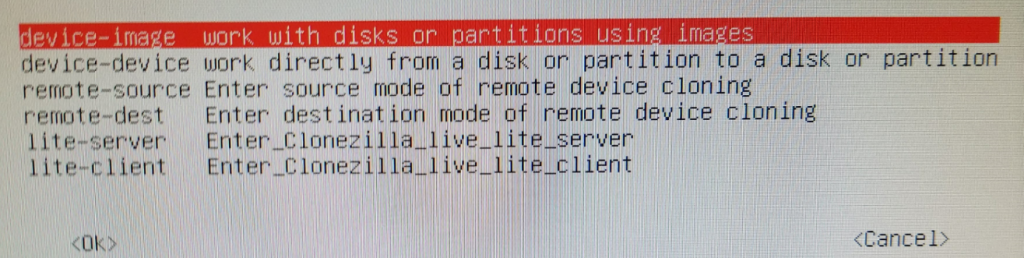

Select English > Don’t touch keymap > Start Clonezilla > device-image (Ok)

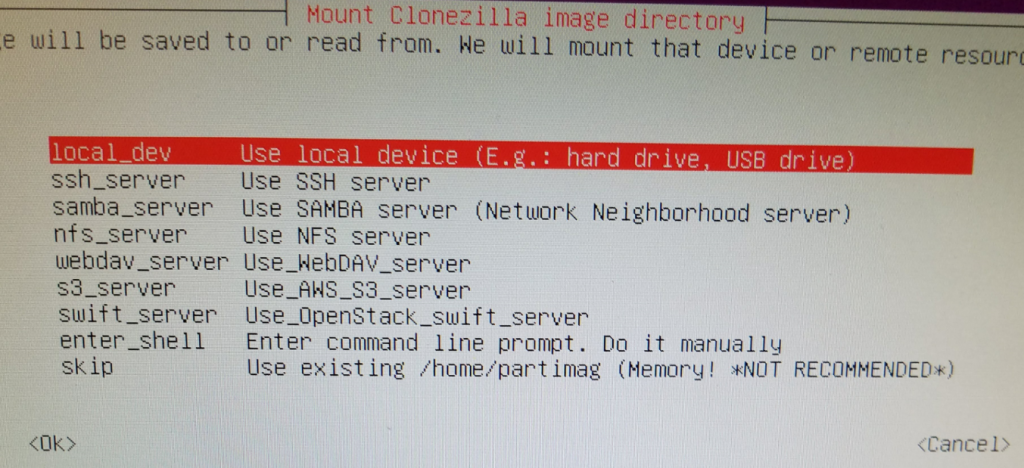

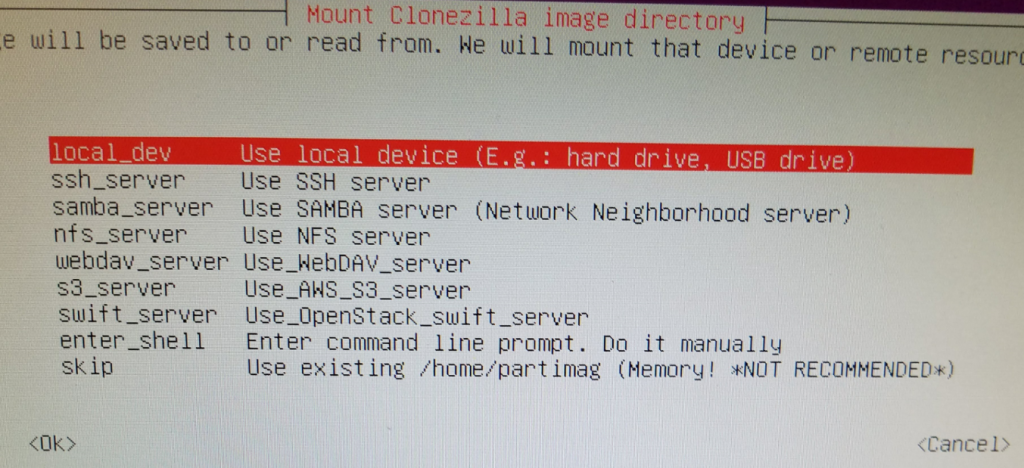

Under Mount Clonezilla image directory, choose Local_dev (Ok)

Press Enter to continue.

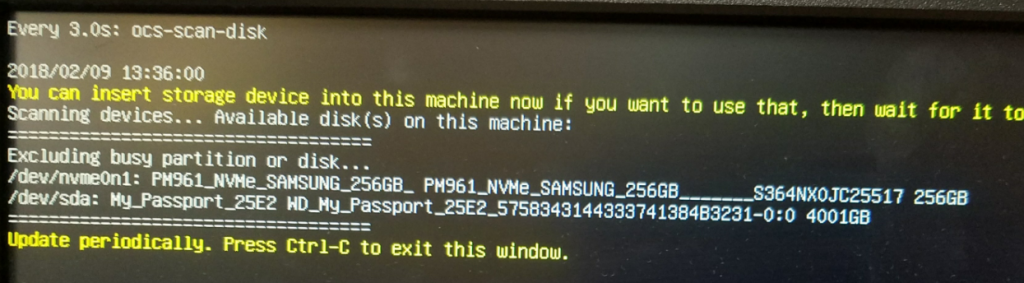

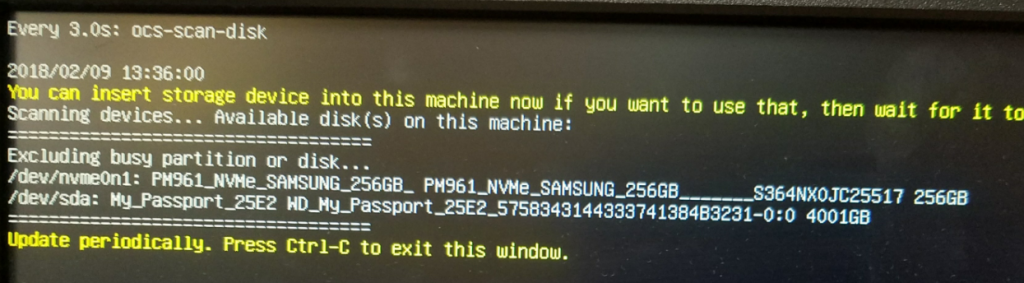

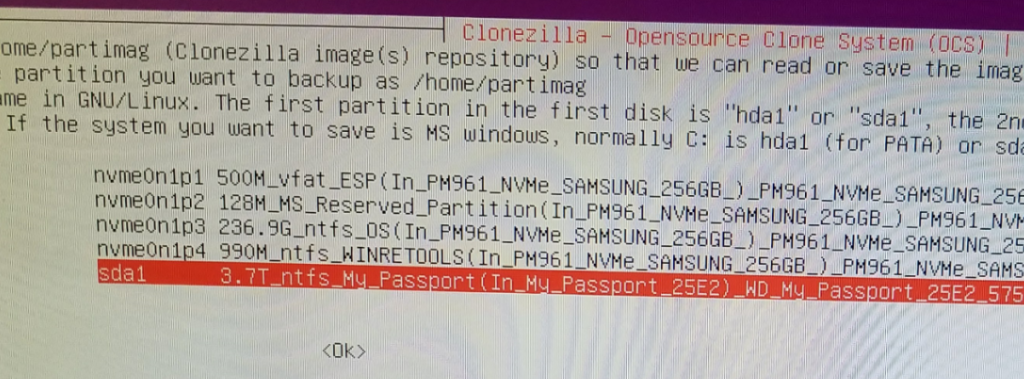

Review the Clonezilla Scan disk preview to make sure it found both the source NVMe drive and the external USB HDD:

Press Ctrl-C to continue.
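If the NVMe drive is missing from the scan, the BIOS changes most likely didn't take effect. As an optional cross-check from a Linux shell (assuming python3 is available in the live environment, which isn't confirmed for this Clonezilla build), the sketch below reads /sys/block, where the kernel lists each disk with its size in 512-byte sectors. The function names are hypothetical; the first NVMe drive normally appears as nvme0n1 and the USB drive as sda.

```python
from __future__ import annotations

import os

# Virtual or optical devices (loop mounts, RAM disks, CD/DVD drives) that
# are skipped so only physical drives are listed.
SKIP_PREFIXES = ("loop", "ram", "zram", "sr")


def list_disks(sys_block: str = "/sys/block") -> dict[str, float]:
    """Map each block device name in sys_block to its size in GB (10**9 bytes).

    The Linux kernel reports /sys/block/<dev>/size in 512-byte sectors,
    independent of the drive's physical sector size.
    """
    disks: dict[str, float] = {}
    for name in sorted(os.listdir(sys_block)):
        if name.startswith(SKIP_PREFIXES):
            continue
        with open(os.path.join(sys_block, name, "size")) as f:
            sectors = int(f.read().strip())
        disks[name] = sectors * 512 / 10**9
    return disks


def nvme_visible(disks: dict[str, float]) -> bool:
    """True if at least one NVMe disk (nvme0n1, nvme1n1, ...) is present."""
    return any(name.startswith("nvme") for name in disks)
```

On the Source PC, list_disks() should show both nvme0n1 and sda, and nvme_visible(list_disks()) should be True.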

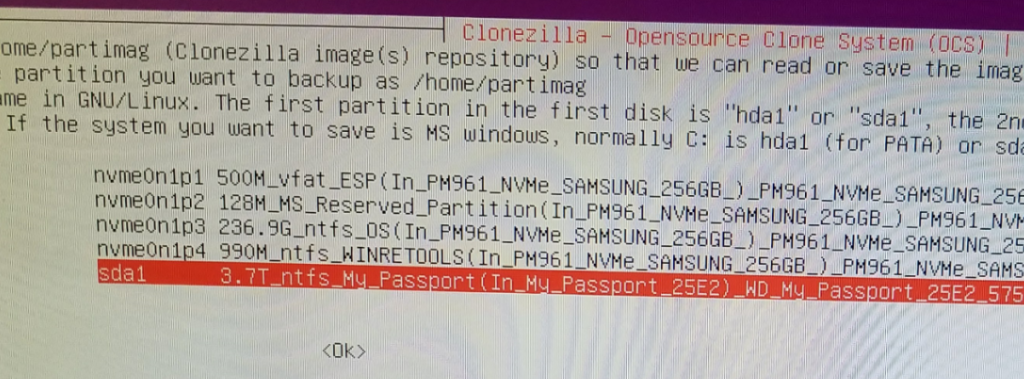

Arrow down and select your large external USB hard drive (sda1) as the location for /home/partimag. This is where the clone image will be stored.

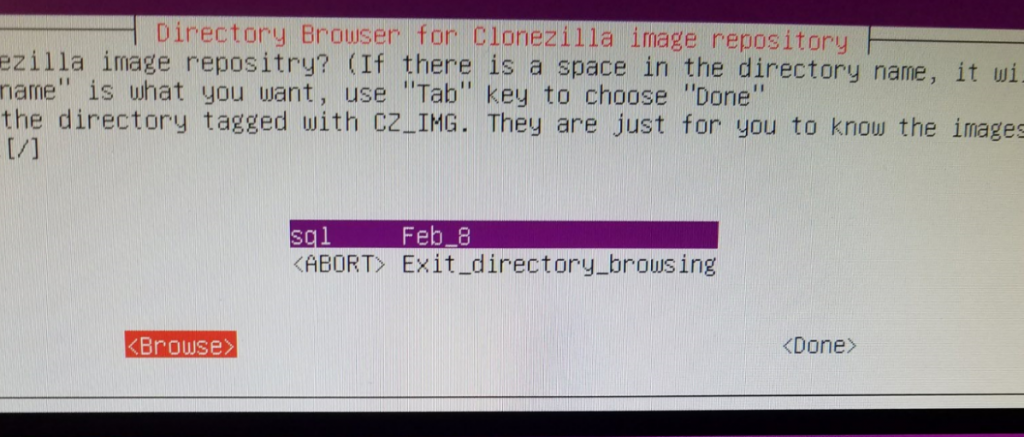

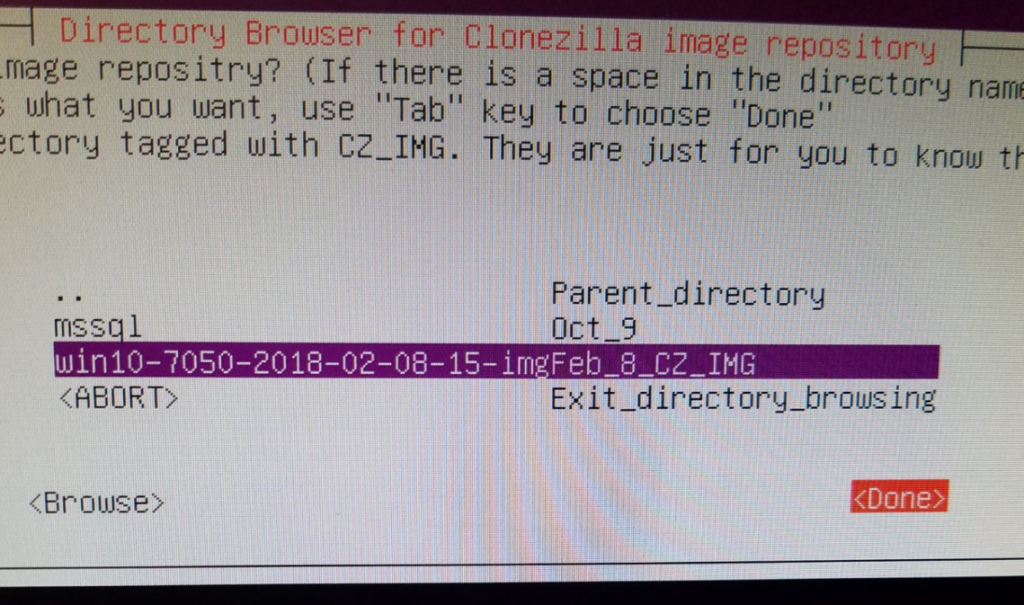

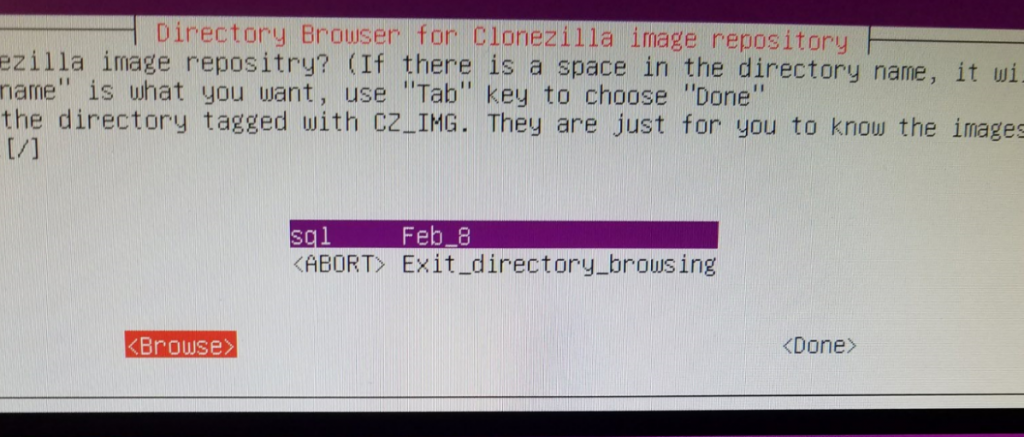

In the Directory Browser, choose “Browse”, go to the Parent Directory (top-most level), and select Done. This is where your image will be saved. You can see in my screenshot that I've already saved an image here.

You will then see a summary showing the source (/dev/sda1) and the target (/home/partimag). Press Enter to continue.

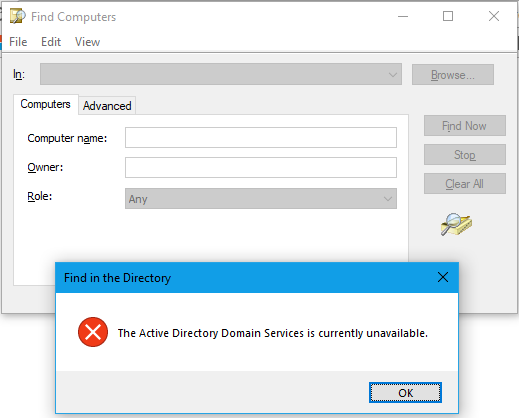

Choose Beginner mode.

Choose Save Disk (Save_local_disk_as_an_image).

With normal spinning HDDs and even SSDs, I've always used Samba to save my images to a separate server over gigabit Ethernet without any trouble. With these new computers and drives, however, selecting SAMBA/SMB 2.1 produced a permissions error: the imaging would start and a couple of the smaller partitions would copy, but as soon as the large primary partition began copying, the permissions error appeared and the clone halted. That's why this procedure uses a local external USB hard drive instead.

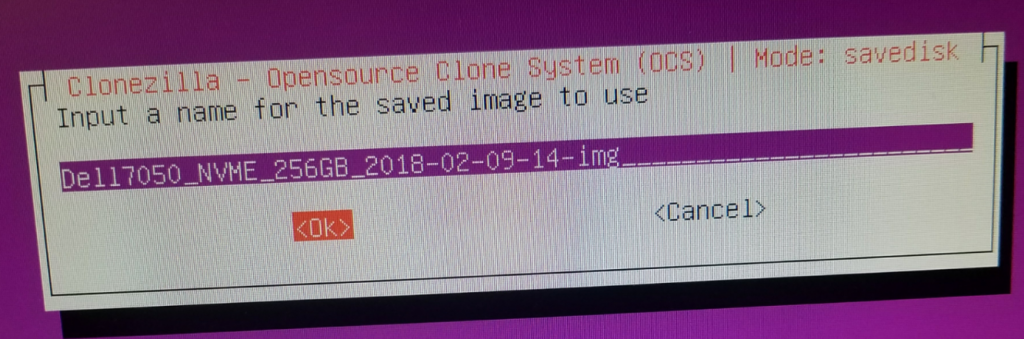

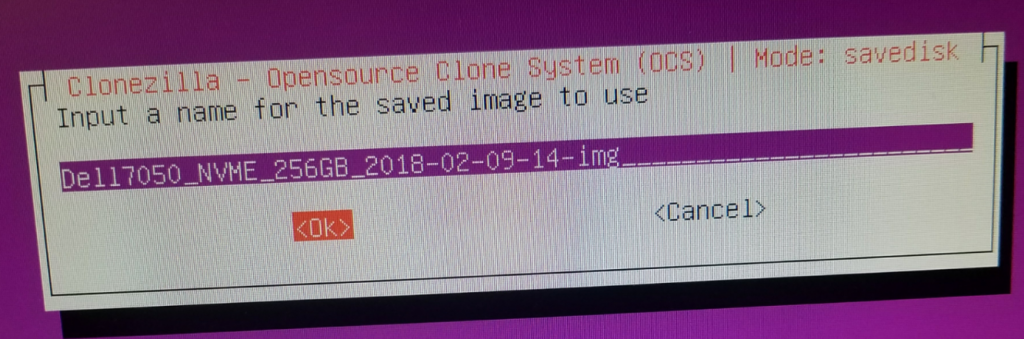

Give the image a descriptive name (for example, Dell7050_NVME_256GB_DATE-IMG) and hit OK.
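With a dozen or more images, a consistent naming scheme pays off. This Python sketch builds names in the Dell7050_NVME_256GB_DATE-IMG pattern; it's a hypothetical helper that assumes an ISO date (YYYY-MM-DD) and replaces anything other than letters, digits, underscores, hyphens, and dots. That character rule is my own conservative assumption, not a documented Clonezilla limit.

```python
from __future__ import annotations

import re
from datetime import date


def image_name(model: str, disk: str, size_gb: int, when: date | None = None) -> str:
    """Build a name like Dell7050_NVME_256GB_2017-09-20-IMG.

    Hypothetical helper: characters outside [A-Za-z0-9_.-] (such as spaces)
    are replaced with underscores so the name is safe as a directory name.
    """
    if when is None:
        when = date.today()
    raw = f"{model}_{disk}_{size_gb}GB_{when.isoformat()}-IMG"
    return re.sub(r"[^A-Za-z0-9_.-]", "_", raw)
```

For example, image_name("Dell7050", "NVME", 256, date(2017, 9, 20)) returns Dell7050_NVME_256GB_2017-09-20-IMG.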

Select the local disk as the source (there should be only one here).

Select -sfsck (skip checking/repairing the source file system).

Select Yes, check the saved image.

Select -senc to not encrypt the image (or choose to encrypt it if desired).

For the action to perform when everything is finished, select -p poweroff.

Press Enter to continue and answer Yes to both confirmations (Yes/Yes). The imaging process will run and write the image of the Source PC to the external USB HDD. The machine should shut down when it's complete.
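Clonezilla typically also displays the equivalent ocs-sr command line for the choices you made, which is handy if you image the same model again. The Python sketch below assembles a similar savedisk command from the options chosen above. Only -sfsck, -senc, and -p poweroff come from this guide; the function name is hypothetical, and checking the saved image is assumed to be Clonezilla's default (so no flag is added for it). Compare the result with the command your own Clonezilla version prints before relying on it. nvme0n1 is the usual Linux name for the first NVMe drive.

```python
import shlex

# Assumption: flags taken from this guide's menu choices; verify them against
# the command line your Clonezilla version displays.
SAVE_FLAGS = [
    "-sfsck",          # skip checking/repairing the source file system
    "-senc",           # do not encrypt the image
    "-p", "poweroff",  # power off when finished
]


def savedisk_command(image_name: str, disk: str = "nvme0n1") -> str:
    """Return a shell-quoted ocs-sr savedisk command line (run it as root).

    Checking the saved image is assumed to be the default, so no flag is
    added for it.
    """
    return shlex.join(["ocs-sr", *SAVE_FLAGS, "savedisk", image_name, disk])
```

For example, savedisk_command("Dell7050_NVME_256GB_2017-09-20-IMG") produces ocs-sr -sfsck -senc -p poweroff savedisk Dell7050_NVME_256GB_2017-09-20-IMG nvme0n1.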

Image Target Computer

Now that the image is saved on the external HDD, we can image the Target PC. With the Target PC powered off, connect the USB thumb drive, external HDD, keyboard, mouse, and monitor, and boot into the BIOS again.

On the new Target computer, change the BIOS settings to match those we made in steps 11, 12, and 13.

After saving the BIOS settings, restart, press F12 again, select the USB thumb drive, and boot Clonezilla.

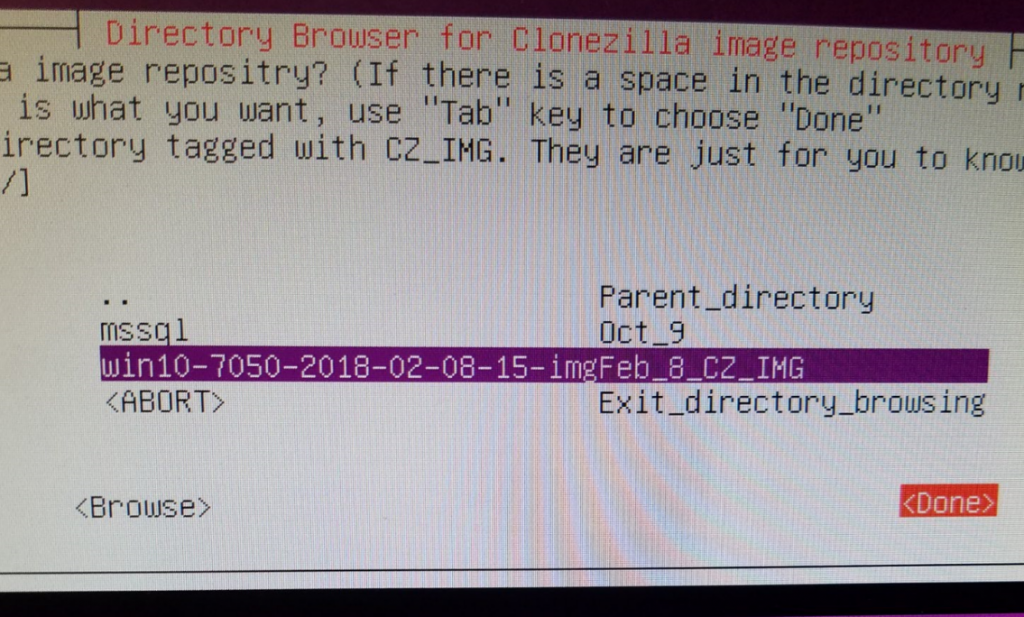

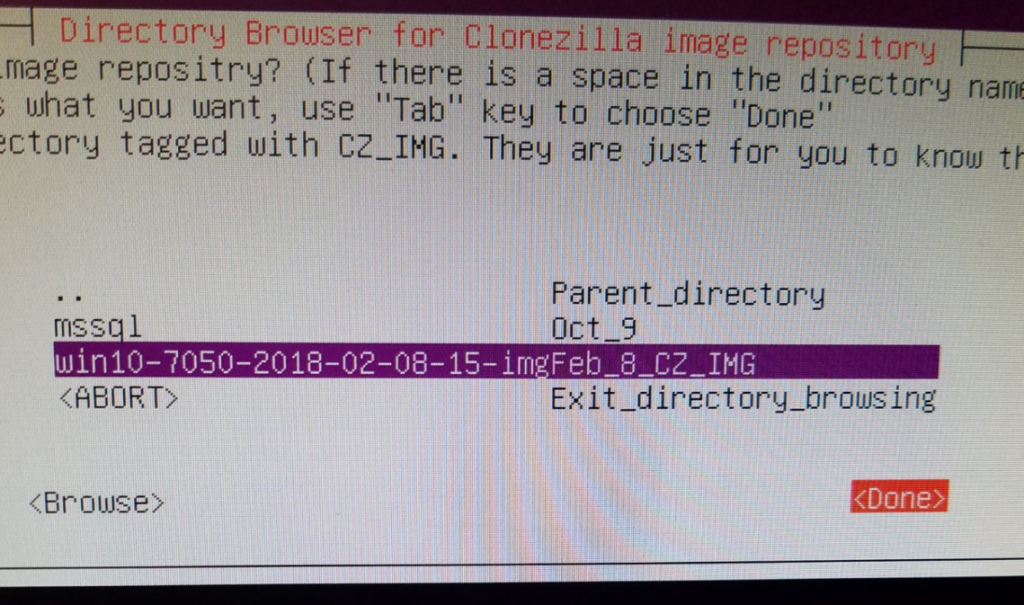

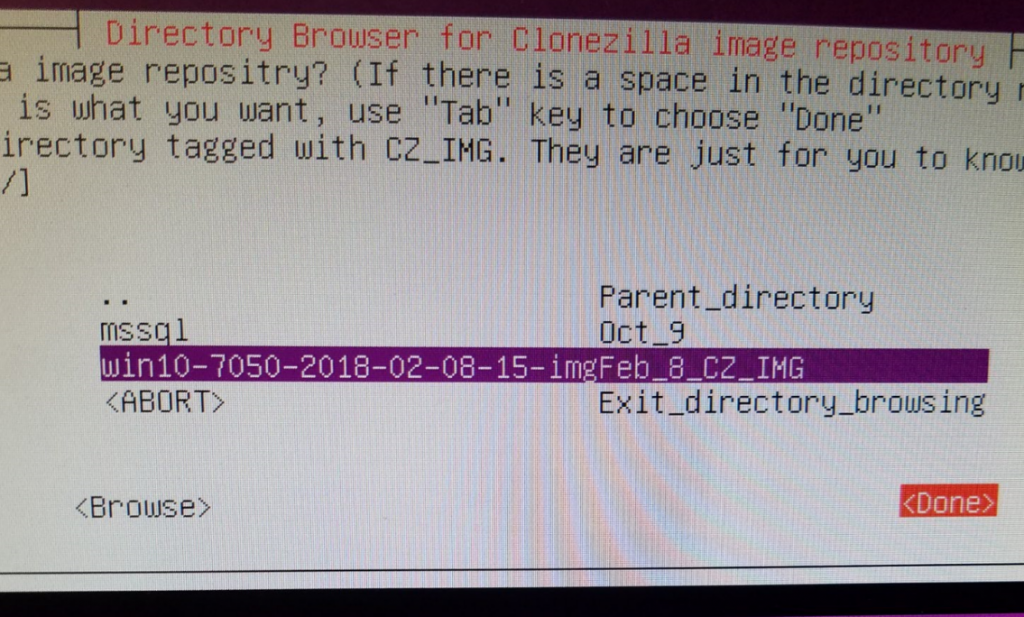

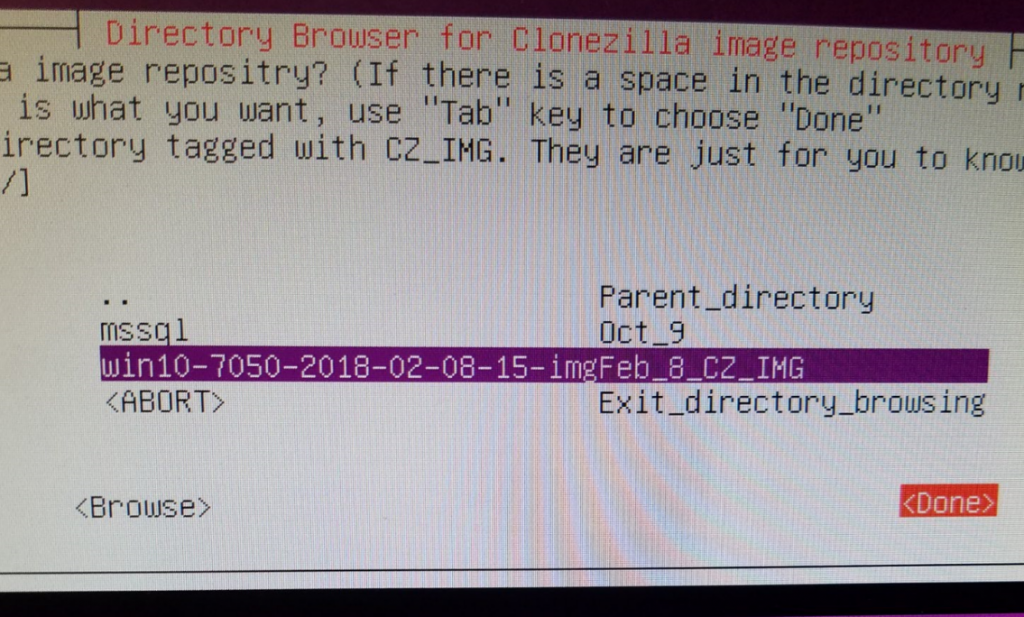

Start Clonezilla > device-image > Local_dev > select the image repository (sda1) > in the Directory Browser, browse to the directory containing the image we created, highlight it, and select Done:

Choose Beginner Mode > Restore Disk:

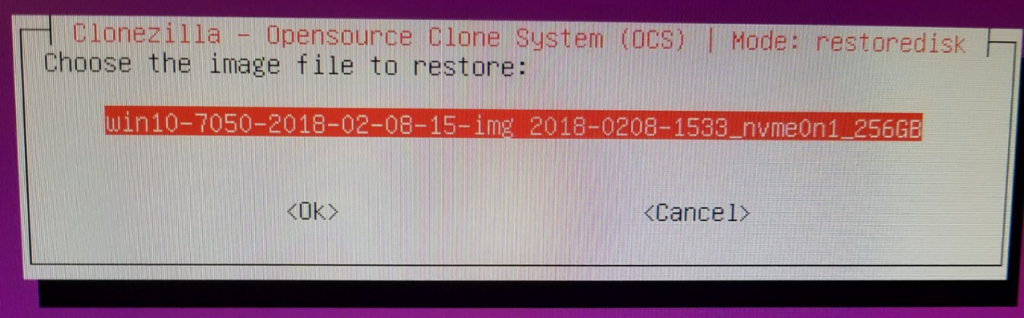

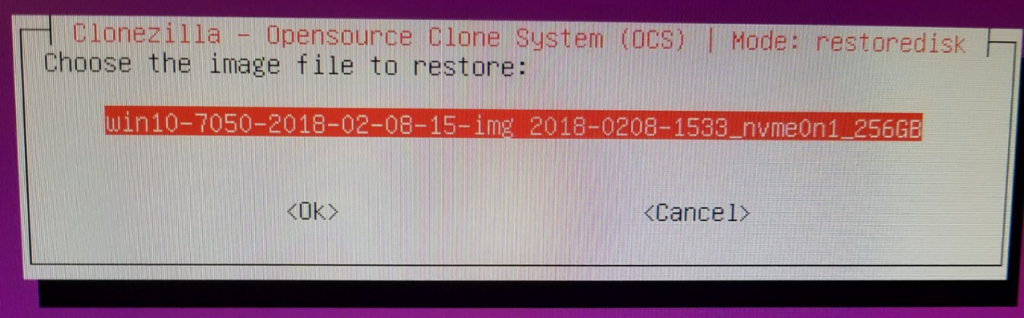

Choose the image to restore:

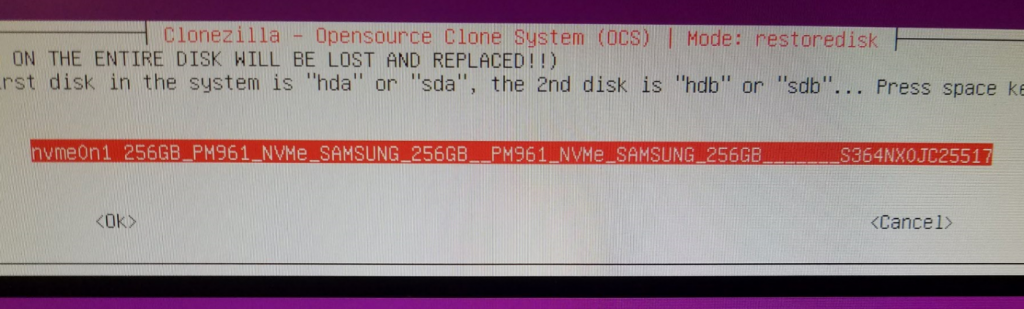

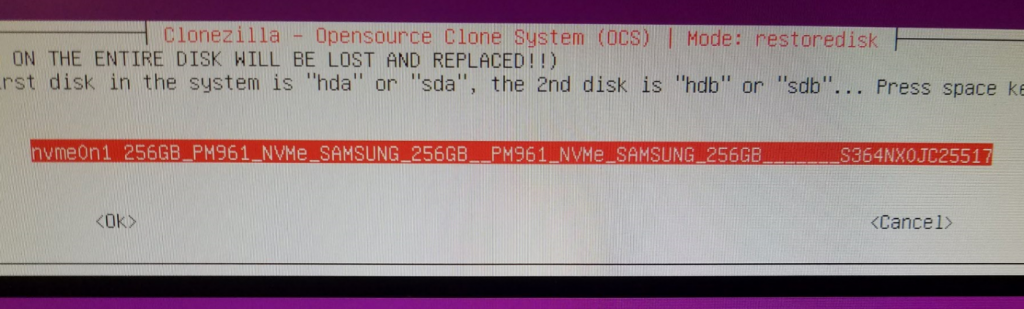

Select the target disk to restore onto (there should be only one listed here):

Select “Skip checking the image before restoring” > poweroff > Enter.

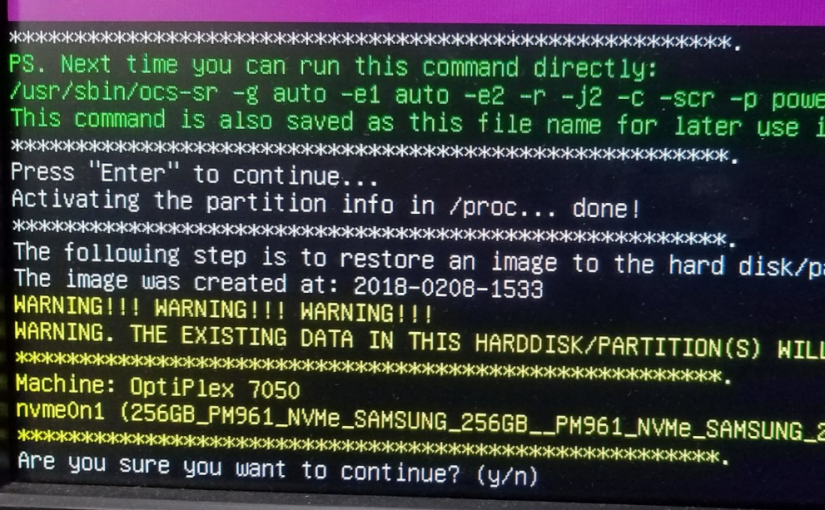

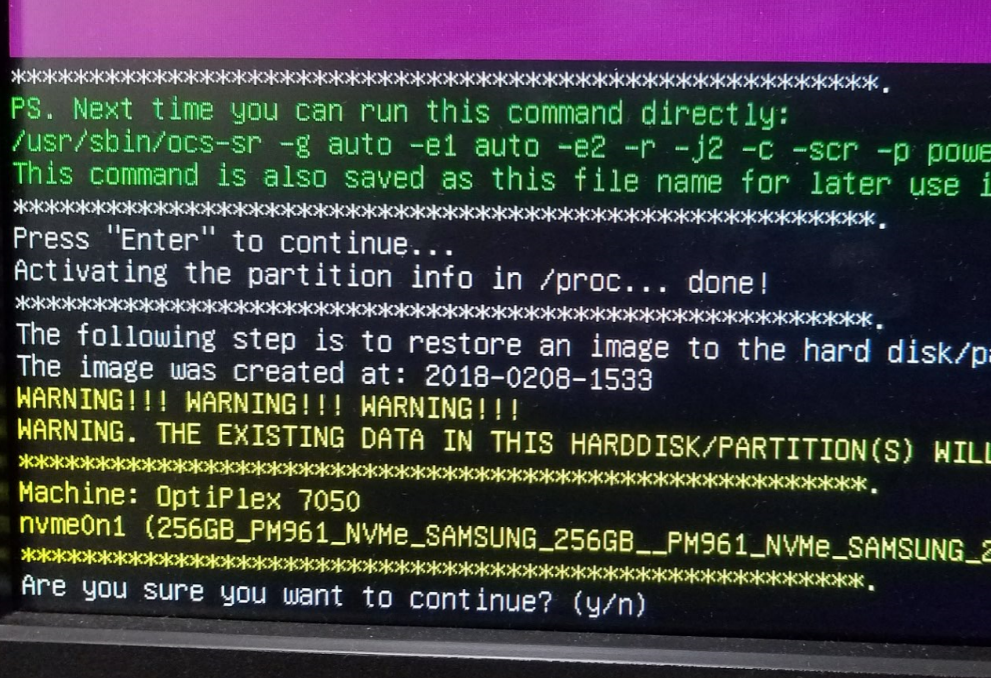

Heed the warning here. If important data is on the target disk, do not proceed. All data will be overwritten:

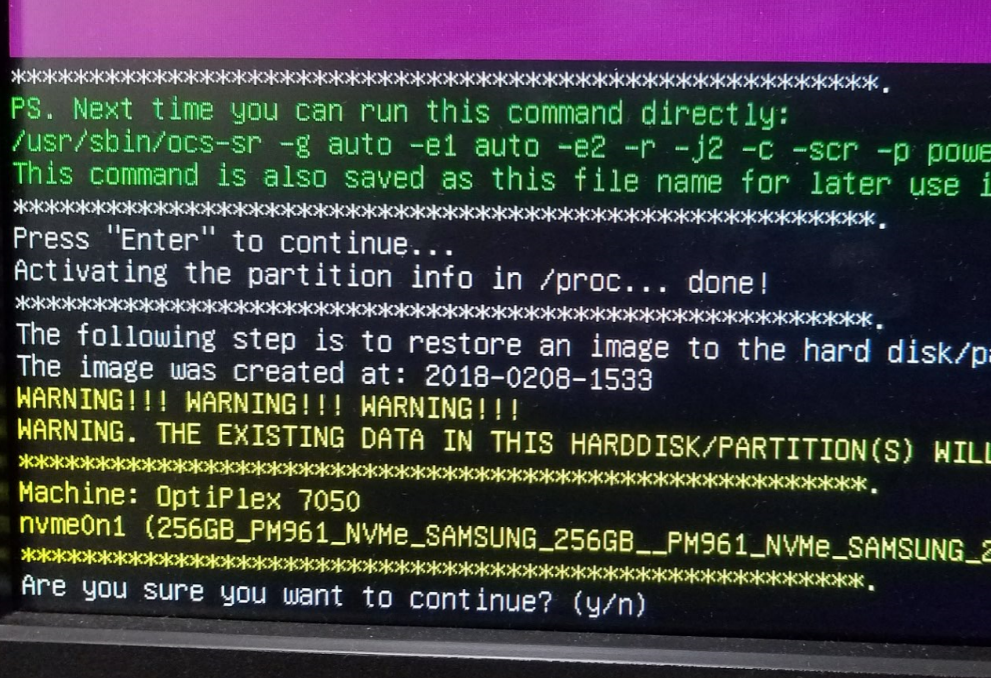

Type y and press Enter, then type y and press Enter again.
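The restore choices above correspond to ocs-sr options as well. This hypothetical Python helper assembles a restoredisk command using -scr (skip checking the image before restoring) and -p poweroff. Clonezilla's beginner mode likely adds other default options that this sketch leaves out, so treat the command your own run displays as the reference. The image name and the nvme0n1 device are examples.

```python
import shlex

# Assumption: flags mirror the restore choices in this guide; beginner mode
# may add further defaults not listed here.
RESTORE_FLAGS = [
    "-scr",            # skip checking the image before restoring
    "-p", "poweroff",  # power off when finished
]


def restoredisk_command(image_name: str, disk: str = "nvme0n1") -> str:
    """Return a shell-quoted ocs-sr restoredisk command line (run as root).

    Warning: running it overwrites every partition on the target disk.
    """
    return shlex.join(["ocs-sr", *RESTORE_FLAGS, "restoredisk", image_name, disk])
```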

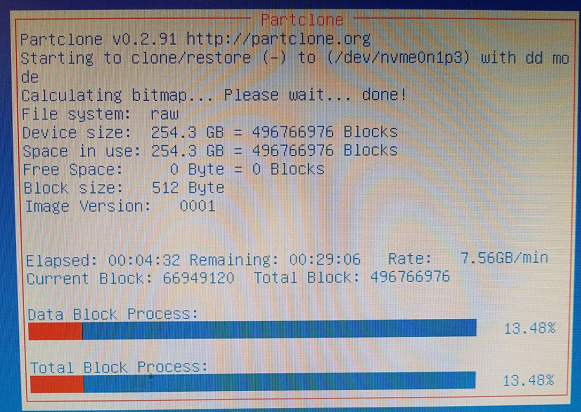

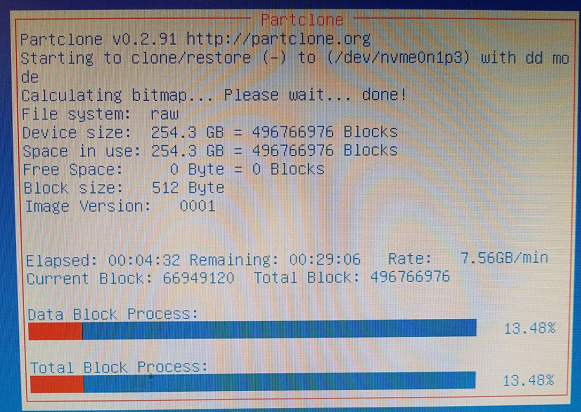

Partclone will run, restore the image to your disk, and then shut the PC down:

With the system powered down, remove your external HDD and boot thumb drive.

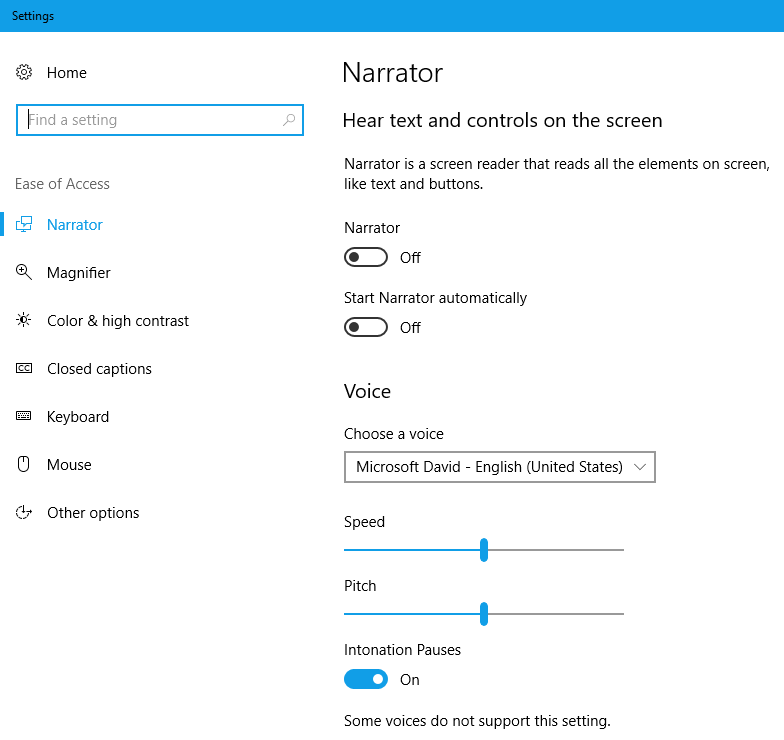

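Power on the newly imaged PC, press F12, and select Setup to get into the BIOS again. Reverse the changes made in steps 11, 12, and 13 (in particular, switch SATA Operation back to RAID On, since Windows was installed in that mode and may fail to boot in AHCI), save the BIOS settings, and boot normally into Windows. Do the same on the Source PC before booting it back into Windows. Congrats, you're done! Hope this helps someone clone their newer systems with Clonezilla.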